# Step 4: Create and Deploy a Lambda Function

In this step you create a Lambda function so that whenever a new file is uploaded to the S3 bucket created in Step 1, the file's metadata is written to the DynamoDB table created in Step 3. The Lambda function uses the IAM role created in Step 2 to access S3 and DynamoDB. The scenario demonstrates the event-driven behavior of Lambda: uploading a file to the S3 bucket invokes the function, which records the file's metadata in the DynamoDB table.

Lambda is a serverless, event-driven compute service that automatically runs your code in response to events.

### Make sure you have completed the tasks below before executing this scenario

1. Create an S3 bucket.
2. Create an IAM role for accessing S3 and DynamoDB.
3. Create a DynamoDB table for the Lambda function.
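The three prerequisites can also be checked from a script before you start. The following is a minimal sketch, assuming boto3 is installed and credentials are configured; `check_prerequisites` is a hypothetical helper, and the bucket, table, and role names you pass in are your own values from Steps 1 to 3.

```python
def check_prerequisites(s3_client, dynamodb_client, iam_client, bucket, table, role):
    """Confirm that the bucket, table, and role from Steps 1 to 3 exist.

    Each call raises an exception if the resource is missing or not accessible.
    """
    # head_bucket returns normally only if the bucket exists and is reachable.
    s3_client.head_bucket(Bucket=bucket)
    # describe_table reports the table status; it should be "ACTIVE" before use.
    table_status = dynamodb_client.describe_table(TableName=table)["Table"]["TableStatus"]
    # get_role returns the role details, including its ARN.
    role_arn = iam_client.get_role(RoleName=role)["Role"]["Arn"]
    return {"table_status": table_status, "role_arn": role_arn}
```

A call would look like `check_prerequisites(boto3.client("s3"), boto3.client("dynamodb"), boto3.client("iam"), "<your bucket>", "<your table>", "<your role>")`.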

-

1.

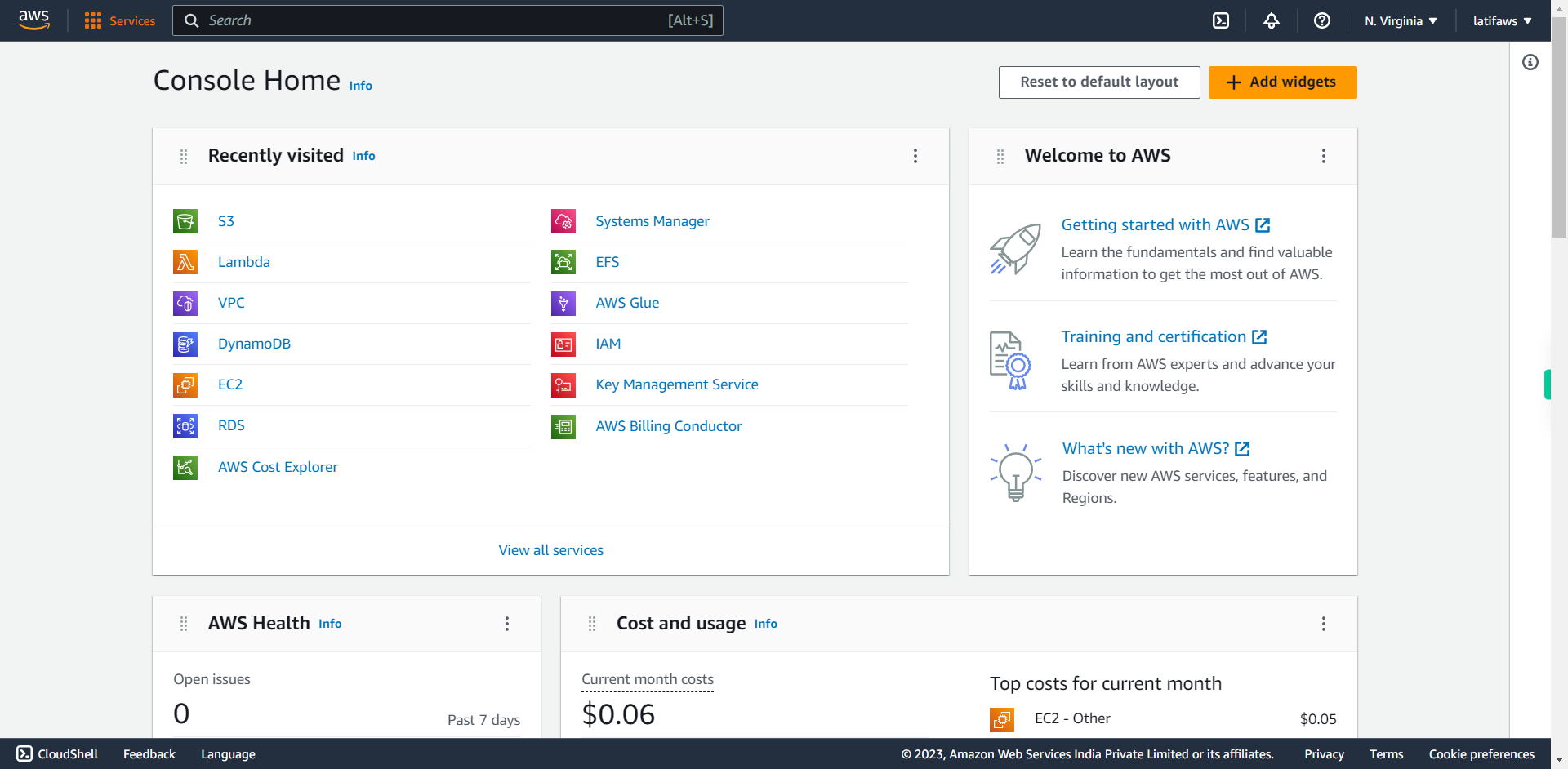

Open the AWS Management Console.

-

2.

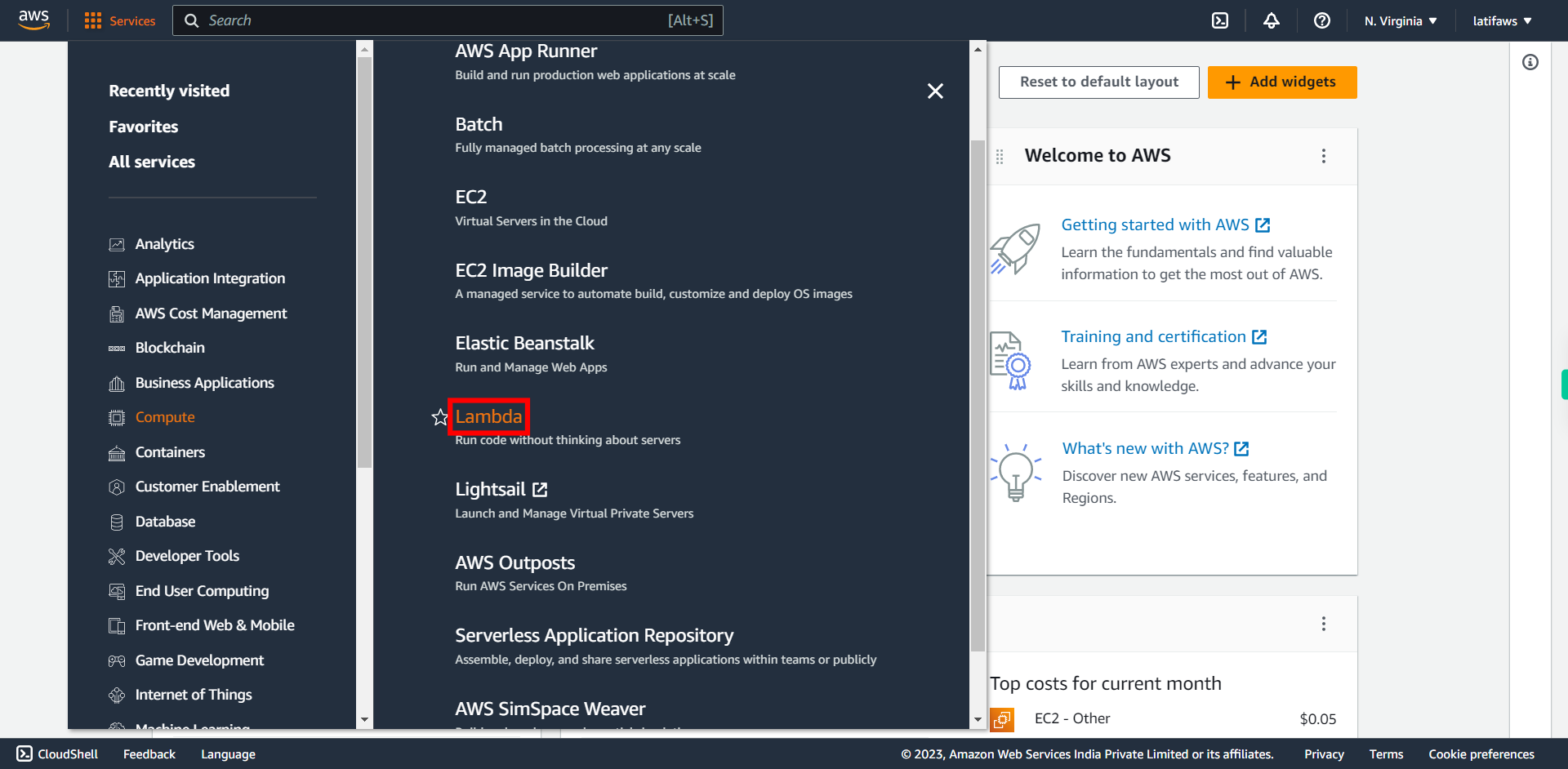

To create the Lambda function that writes the metadata of uploaded files to the DynamoDB table, follow these steps. Click "Services"

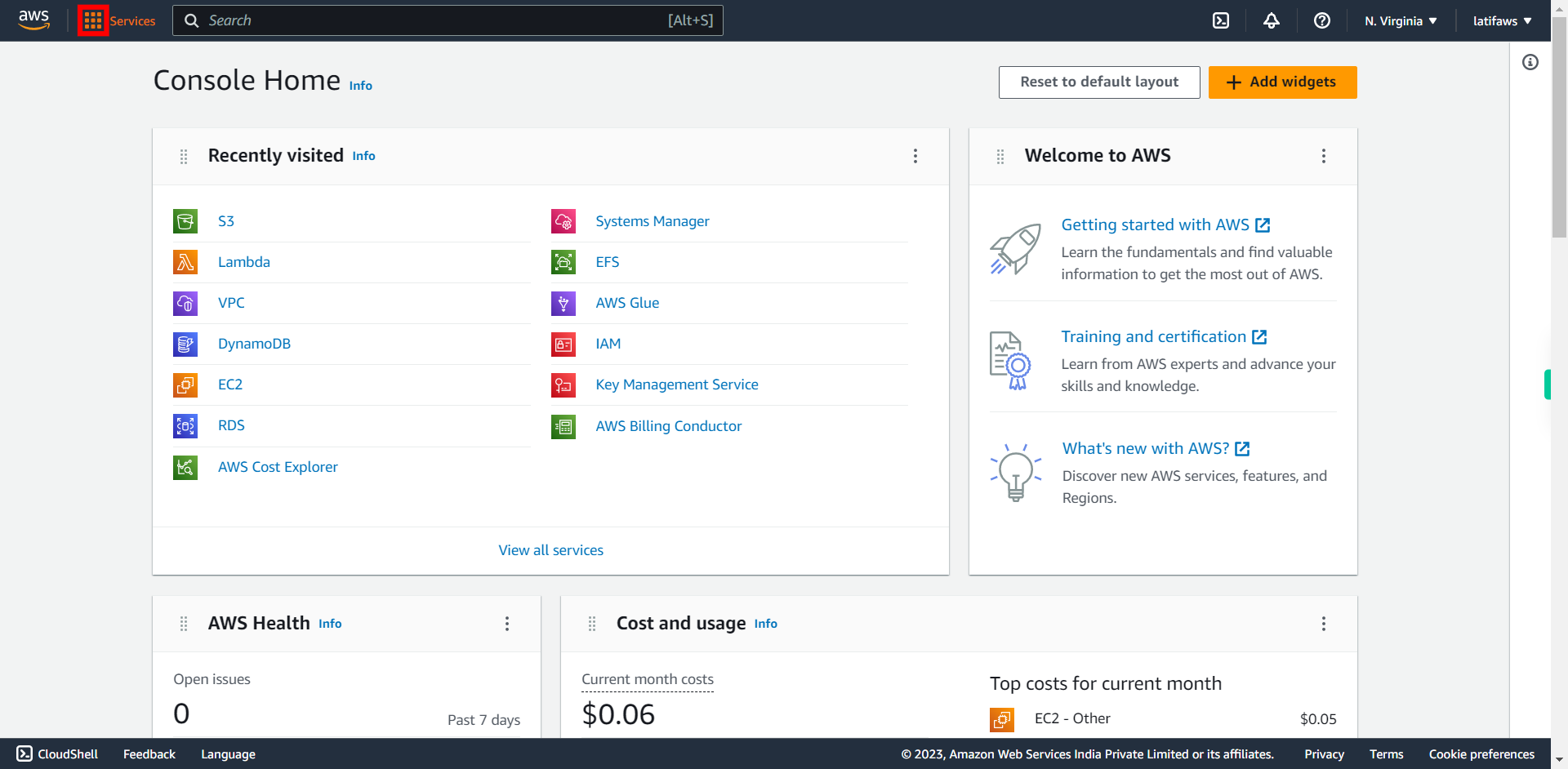

-

3.

Click "Compute"

-

4.

Click "Lambda"

### Lambda

AWS Lambda is an event-driven, serverless compute service. It runs your code in response to events and automatically manages the compute resources that code requires, so you do not need to provision or manage servers.

-

5.

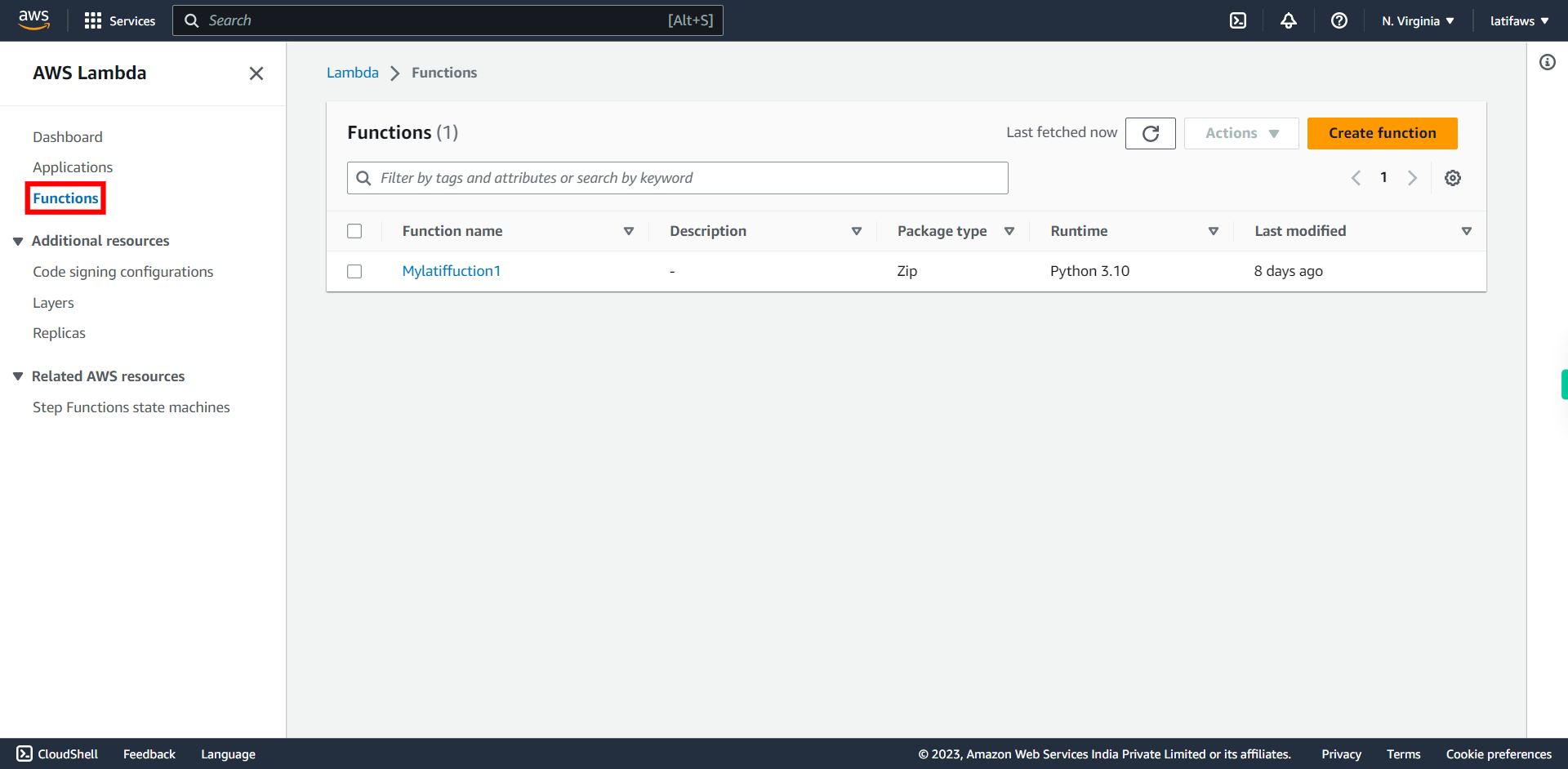

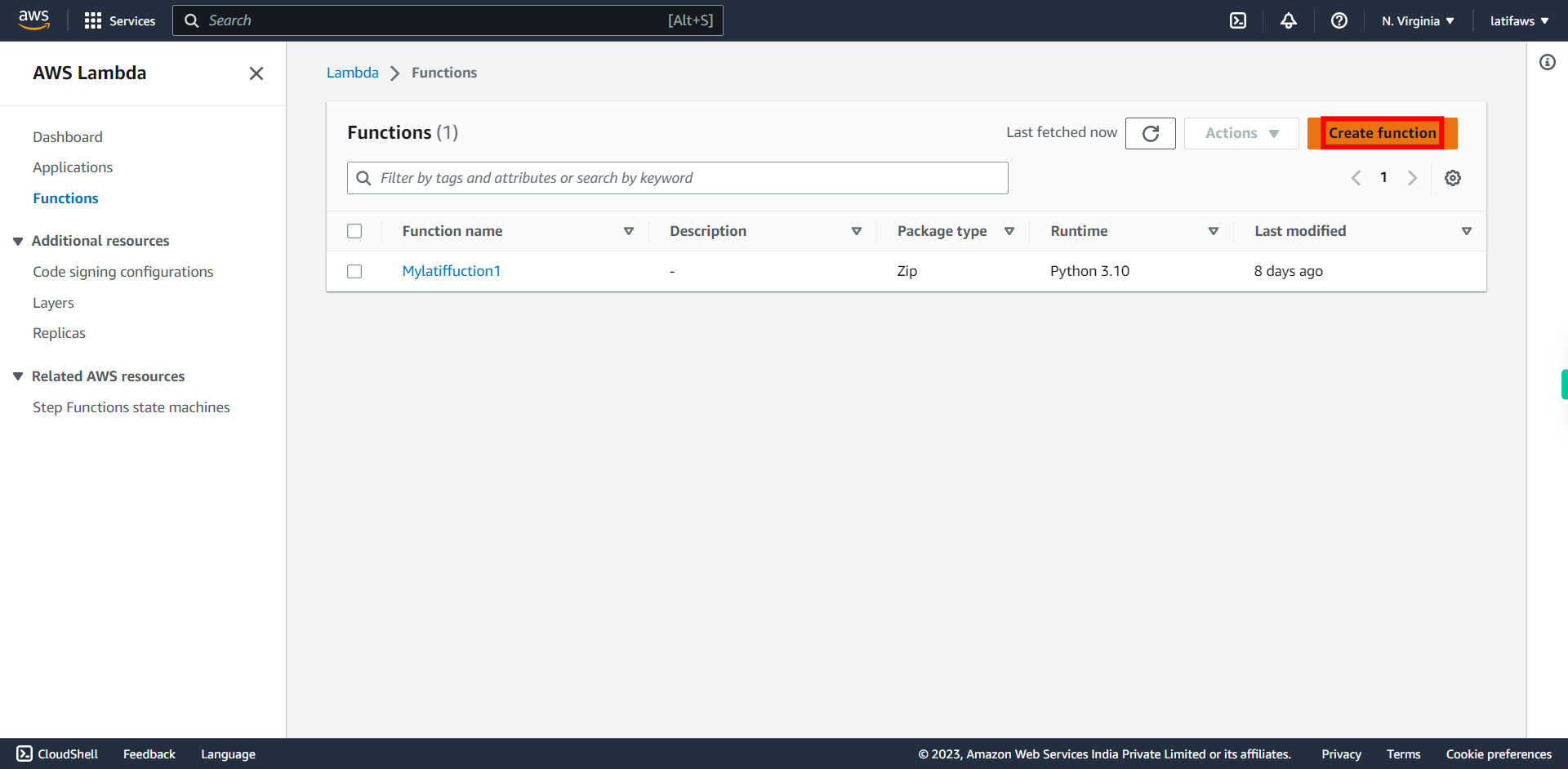

Click "Functions"

-

6.

Click "Create function"

-

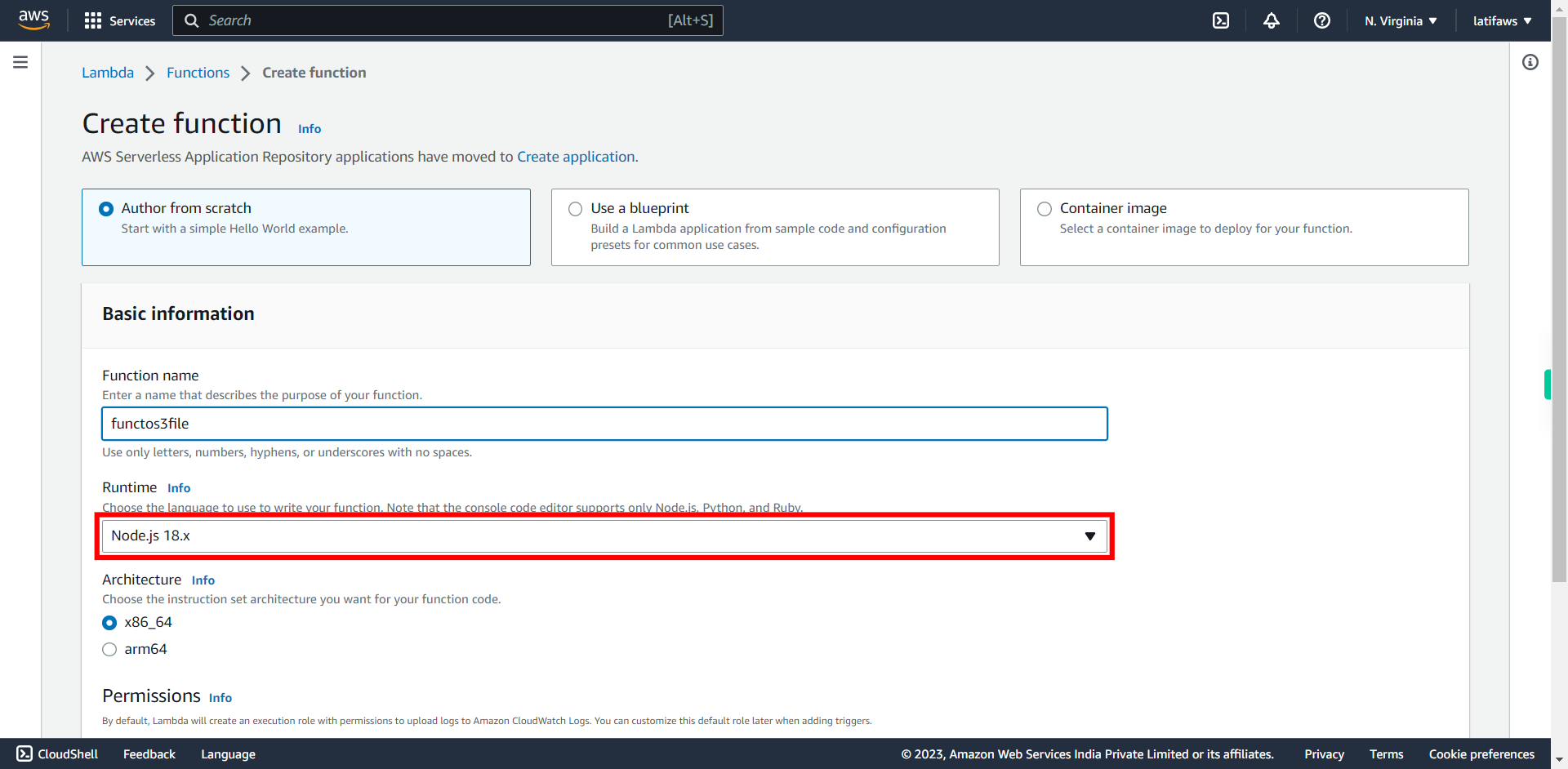

7.

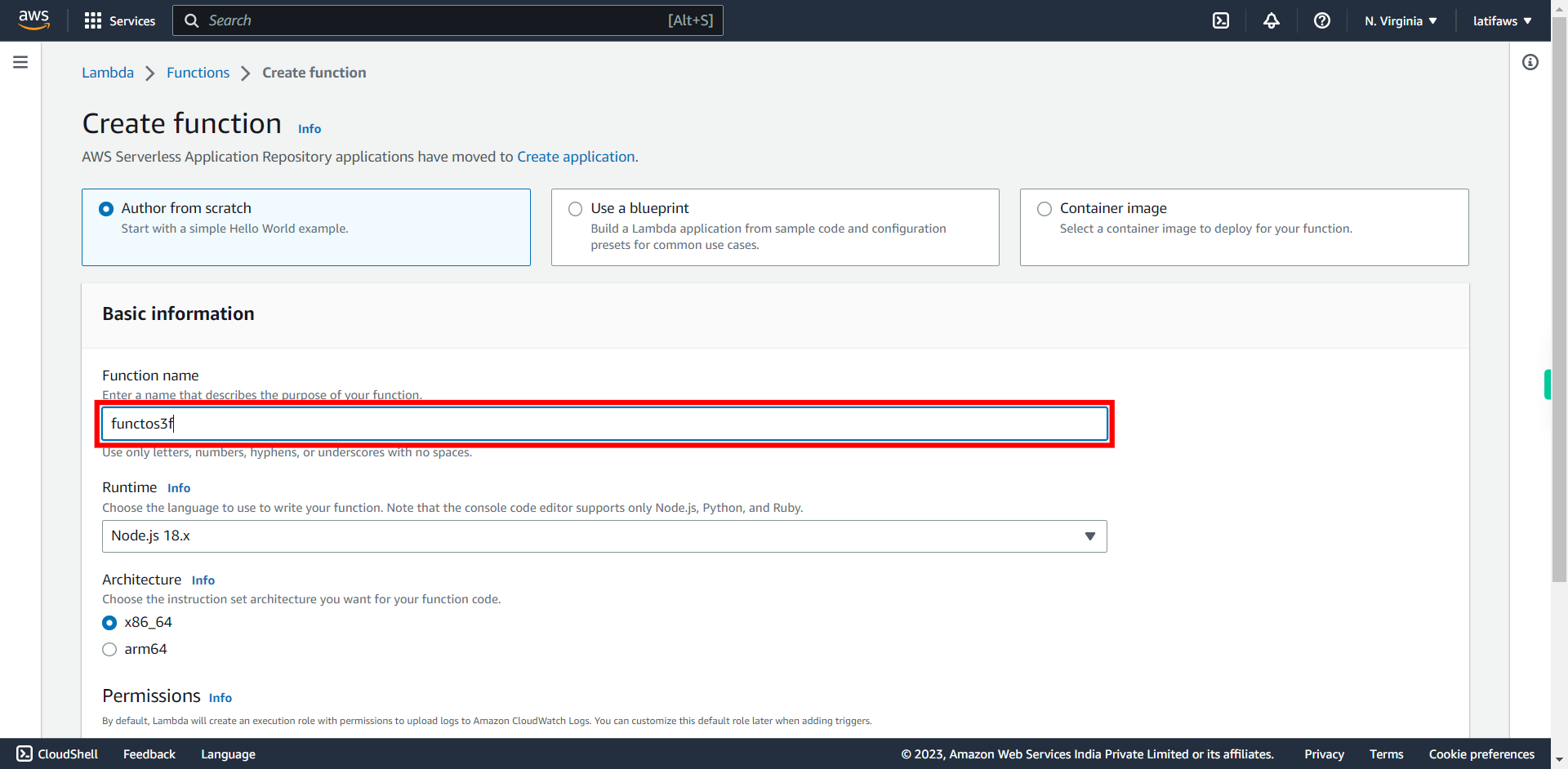

Type a name for the Lambda function, for example `mys3todynamodbfunction`, and click Next to continue.

-

8.

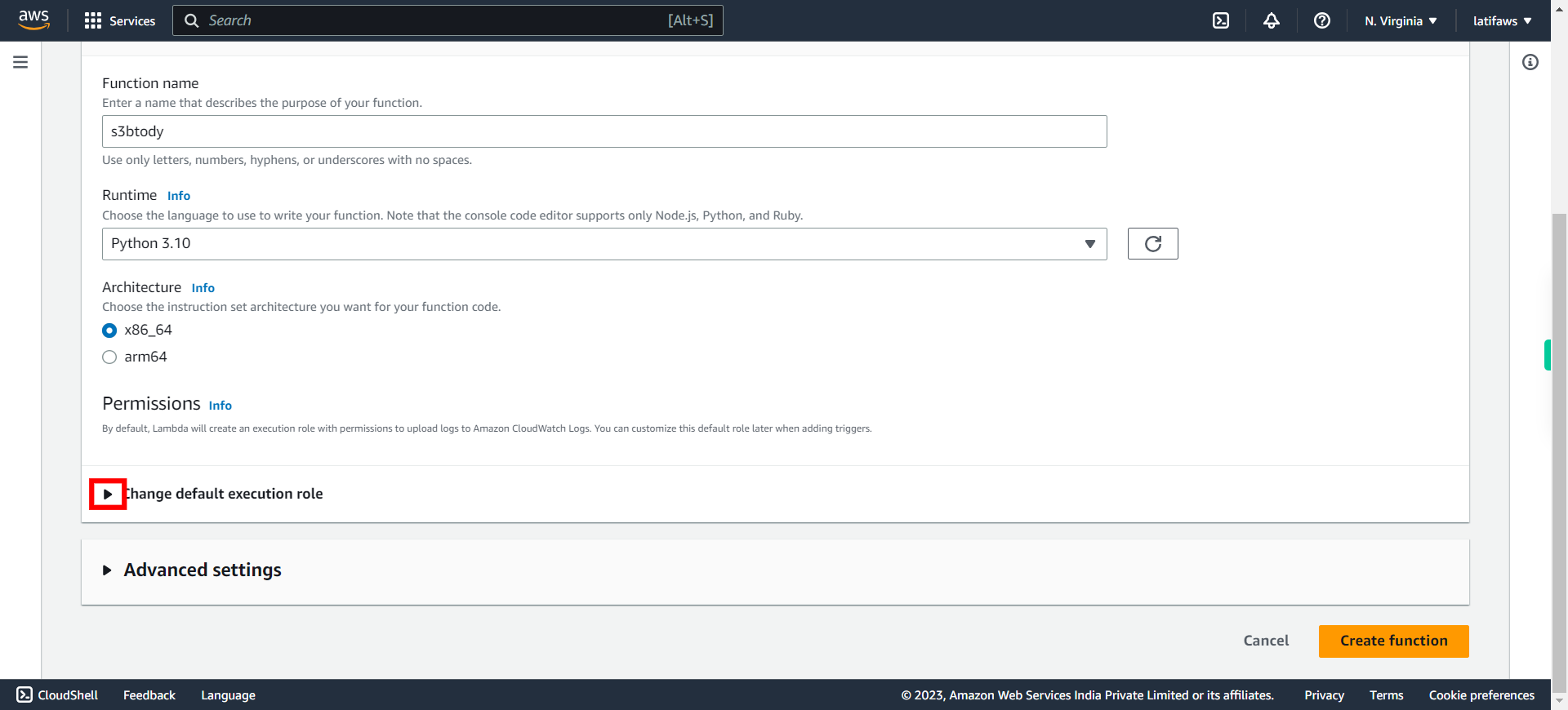

Select "Python 3.10" as the runtime and click Next to continue.

-

9.

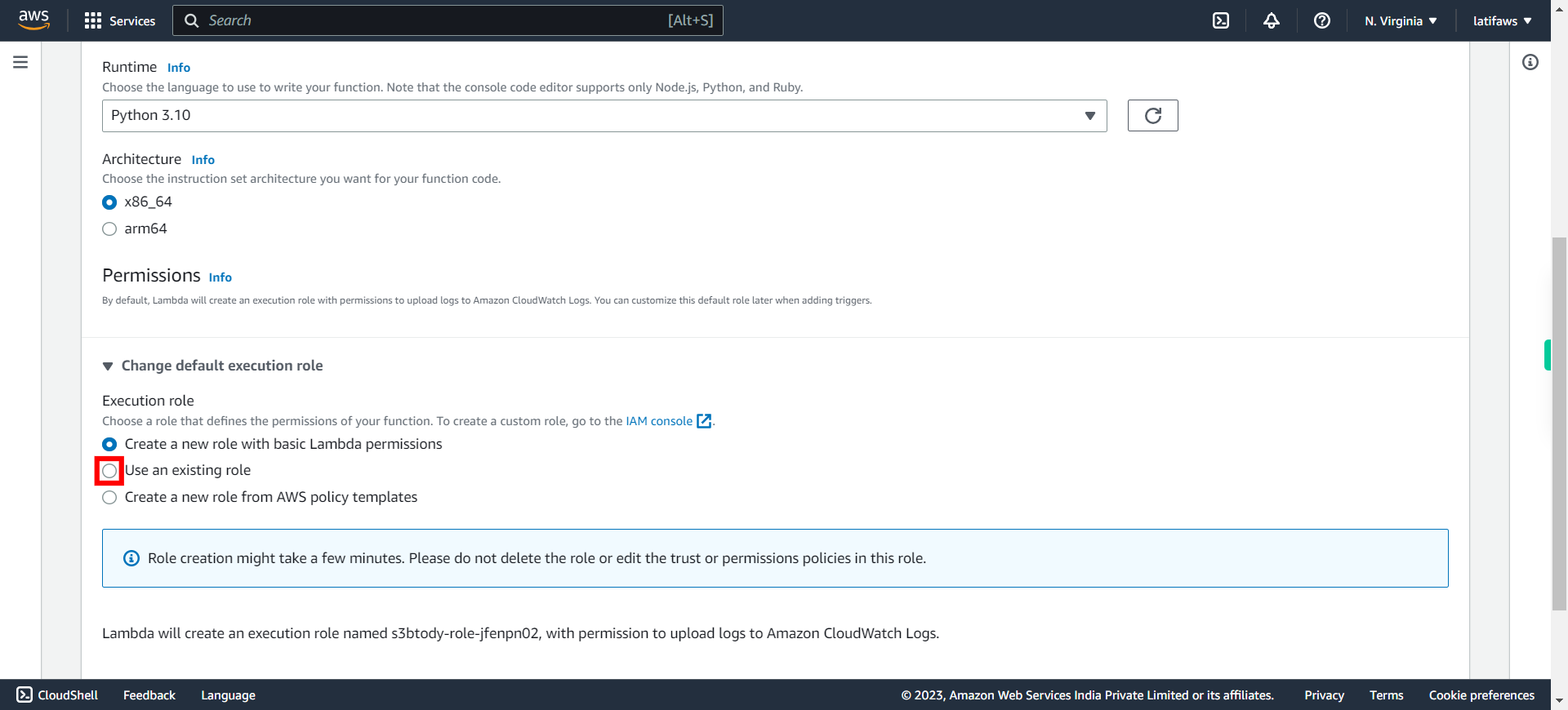

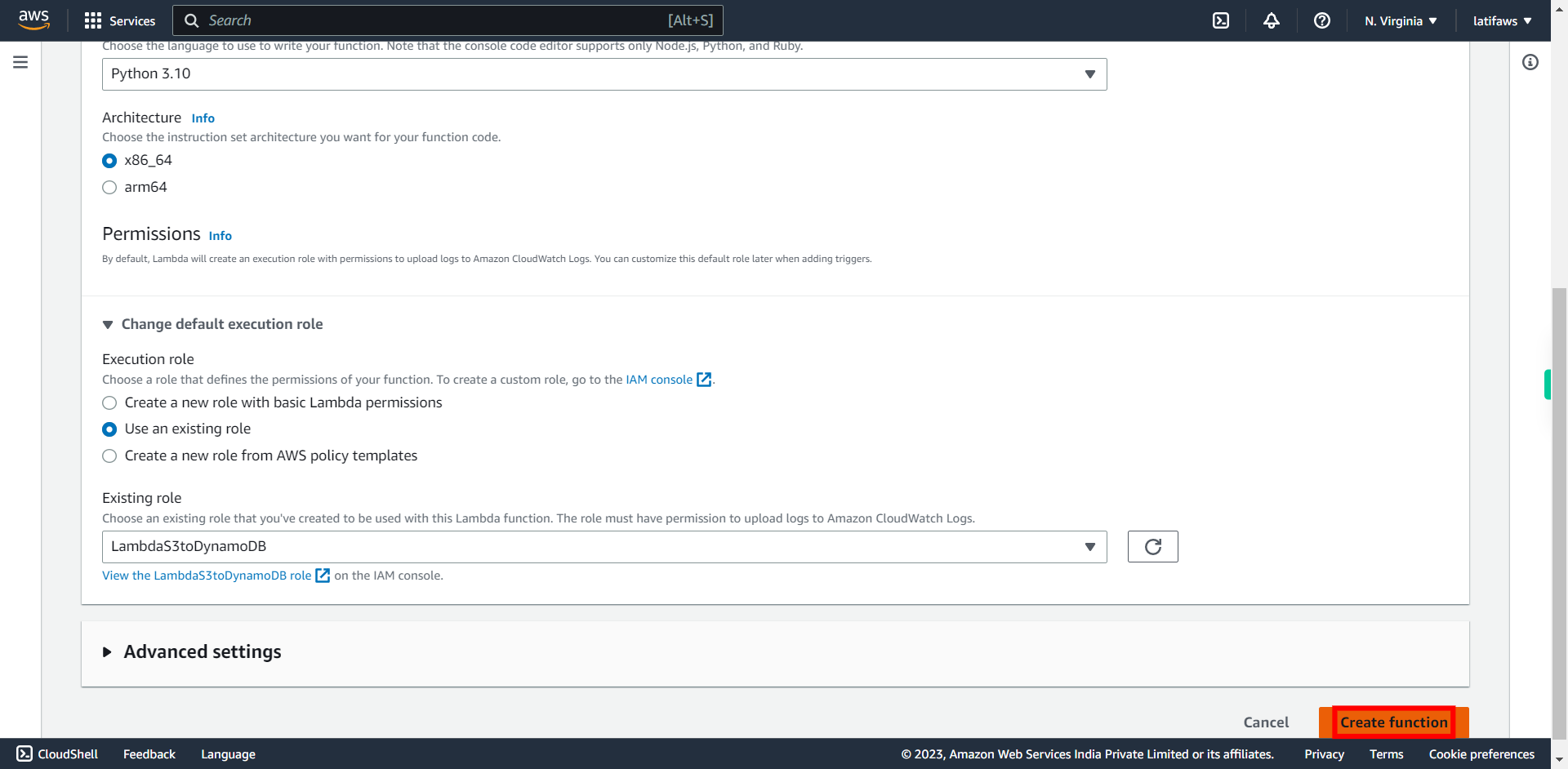

Expand "Change default execution role"

-

10.

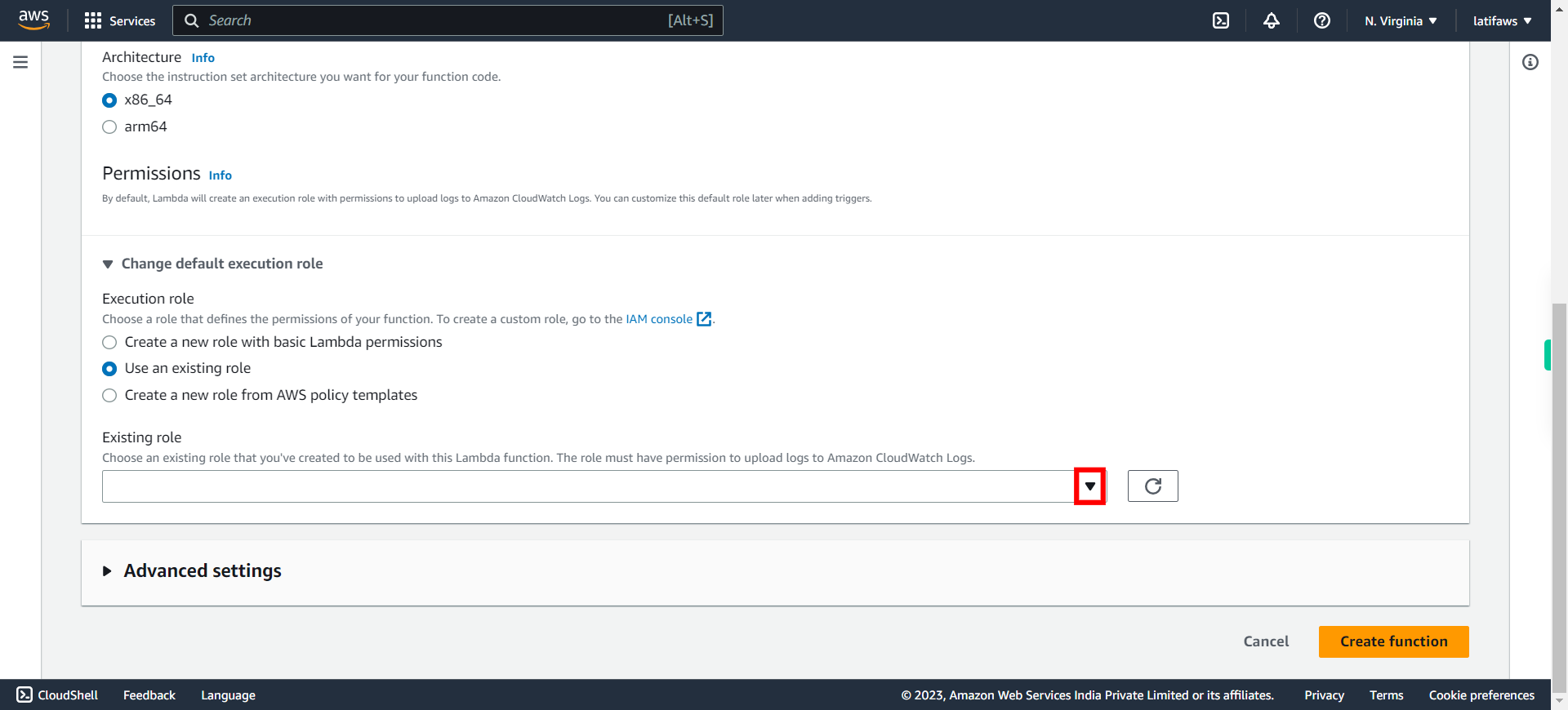

Select "Use an existing role" and click Next to continue.

-

11.

Click the "Existing role" list and select the role **that you created in Step 2 to access S3 and DynamoDB**. Click Next to continue.

-

12.

Click "Create function". The Lambda function will be created.
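For readers who prefer scripting, the console steps for creating the function can be approximated with boto3. This is only a sketch under stated assumptions, not the method this walkthrough uses: `build_deployment_zip` and `create_function` are hypothetical helpers, the handler name `lambda_function.lambda_handler` assumes the source file is called `lambda_function.py`, and the role ARN must be the ARN of the role you created in Step 2.

```python
import io
import zipfile


def build_deployment_zip(source_code: str, filename: str = "lambda_function.py") -> bytes:
    """Package a single Python source file into an in-memory zip archive."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(filename, source_code)
    return buffer.getvalue()


def create_function(lambda_client, name: str, role_arn: str, source_code: str):
    """Create a Python 3.10 function that runs under an existing execution role."""
    return lambda_client.create_function(
        FunctionName=name,
        Runtime="python3.10",
        Role=role_arn,  # ARN of the existing role from Step 2
        Handler="lambda_function.lambda_handler",  # assumed file and function name
        Code={"ZipFile": build_deployment_zip(source_code)},
    )
```

With real credentials, the client would be `boto3.client("lambda")` and the name could be `mys3todynamodbfunction`, as in the example above.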

-

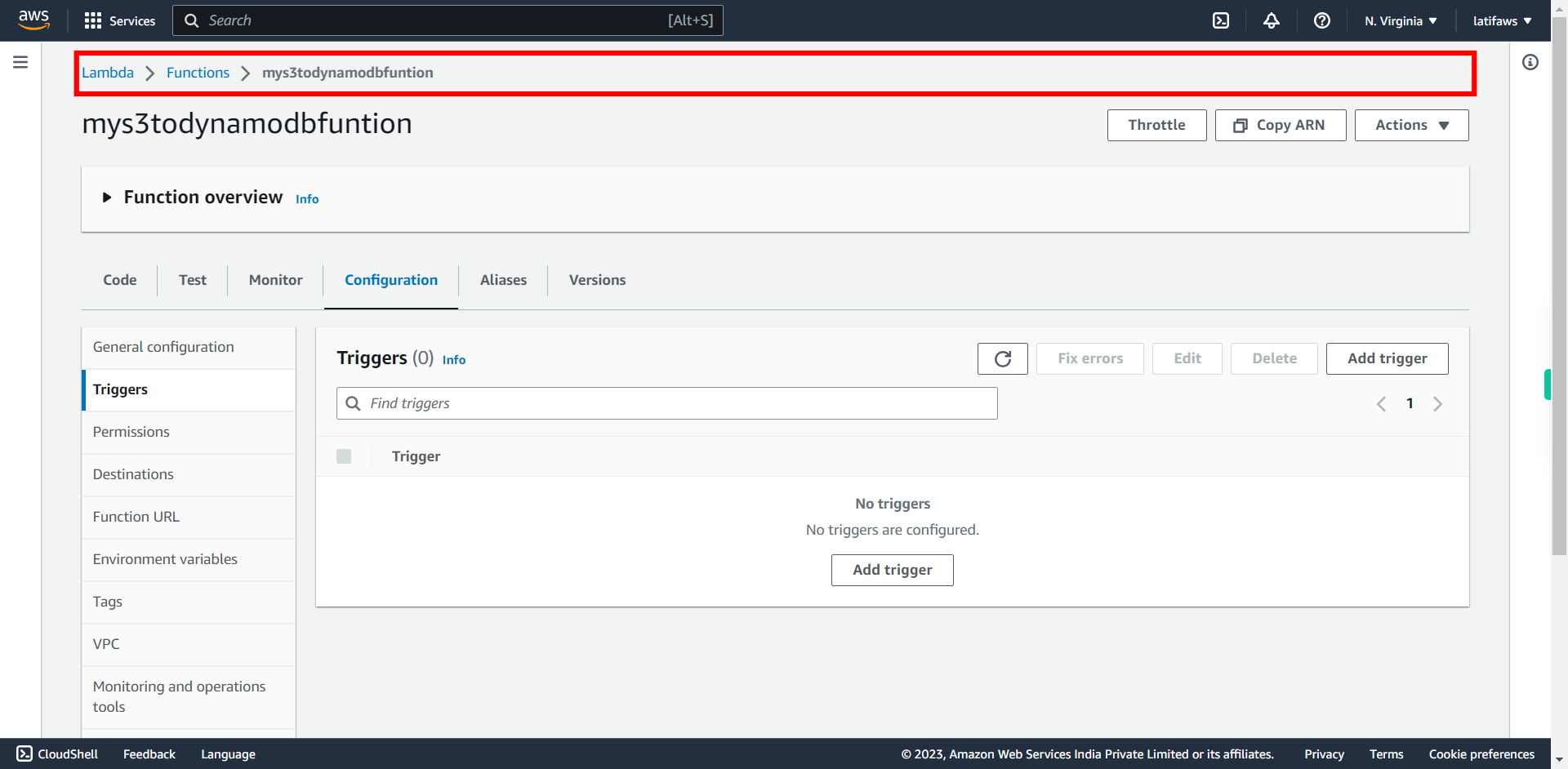

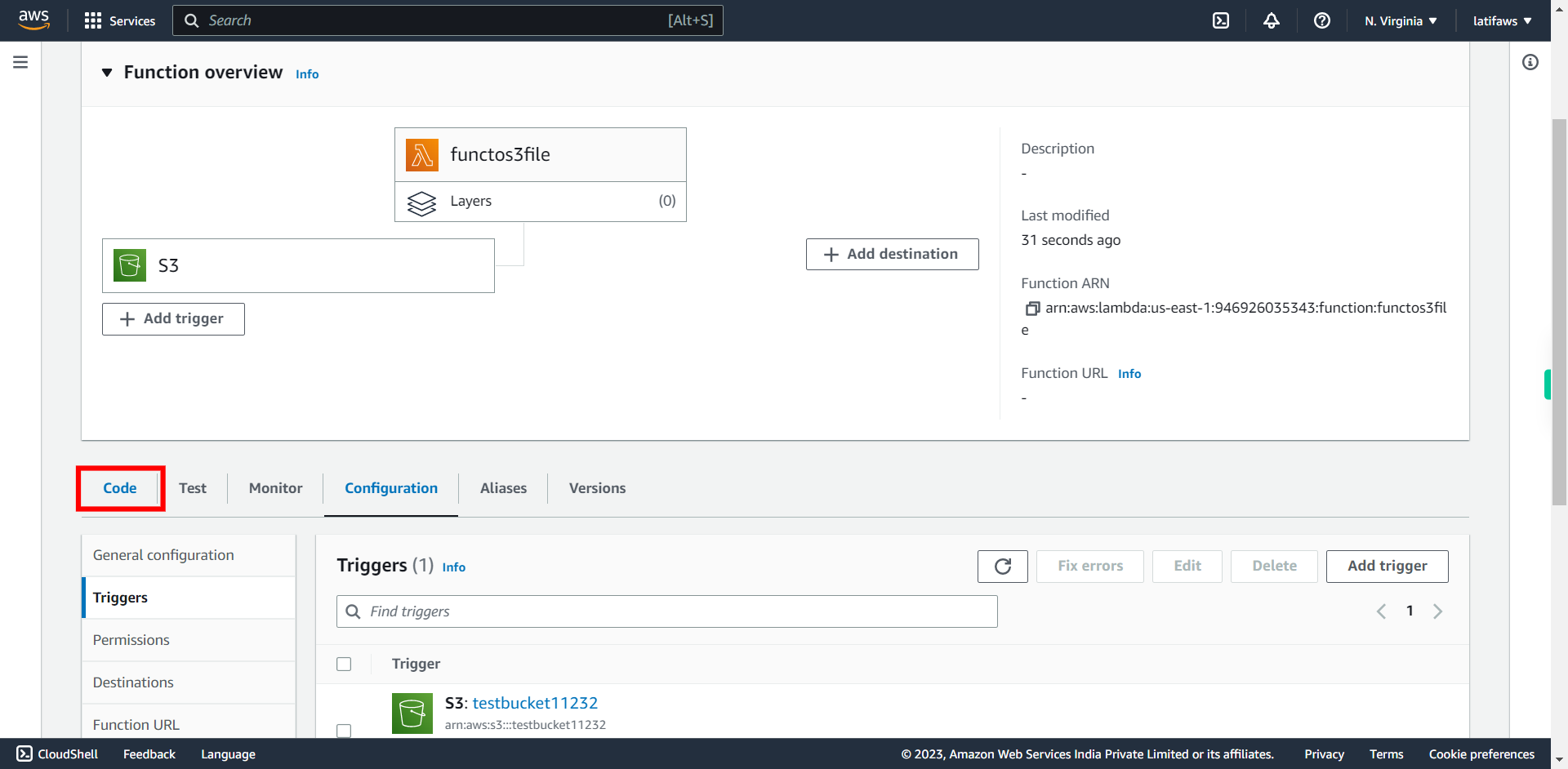

13.

Expand "Function overview" to add a trigger, destination, or layers. **Here we use only "Add trigger".** Click Next to continue.

### Function configuration

Use the function overview to see the triggers, layers, and destinations attached to your function. The visualization shows the following types of resources: triggers, destinations, and layers.

-

14.

Now add your S3 bucket (created in Step 1) as a trigger. Click "Add trigger"

-

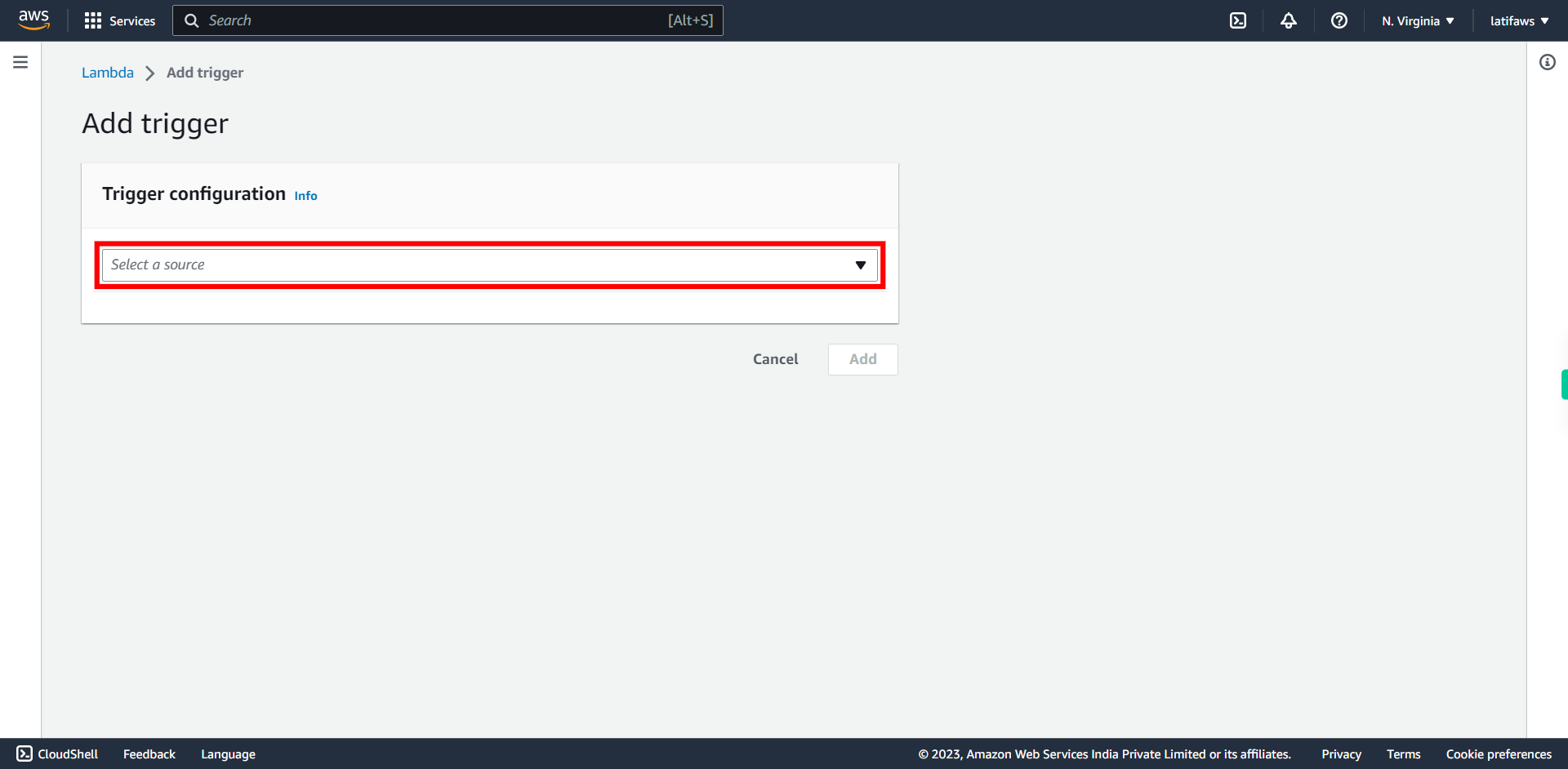

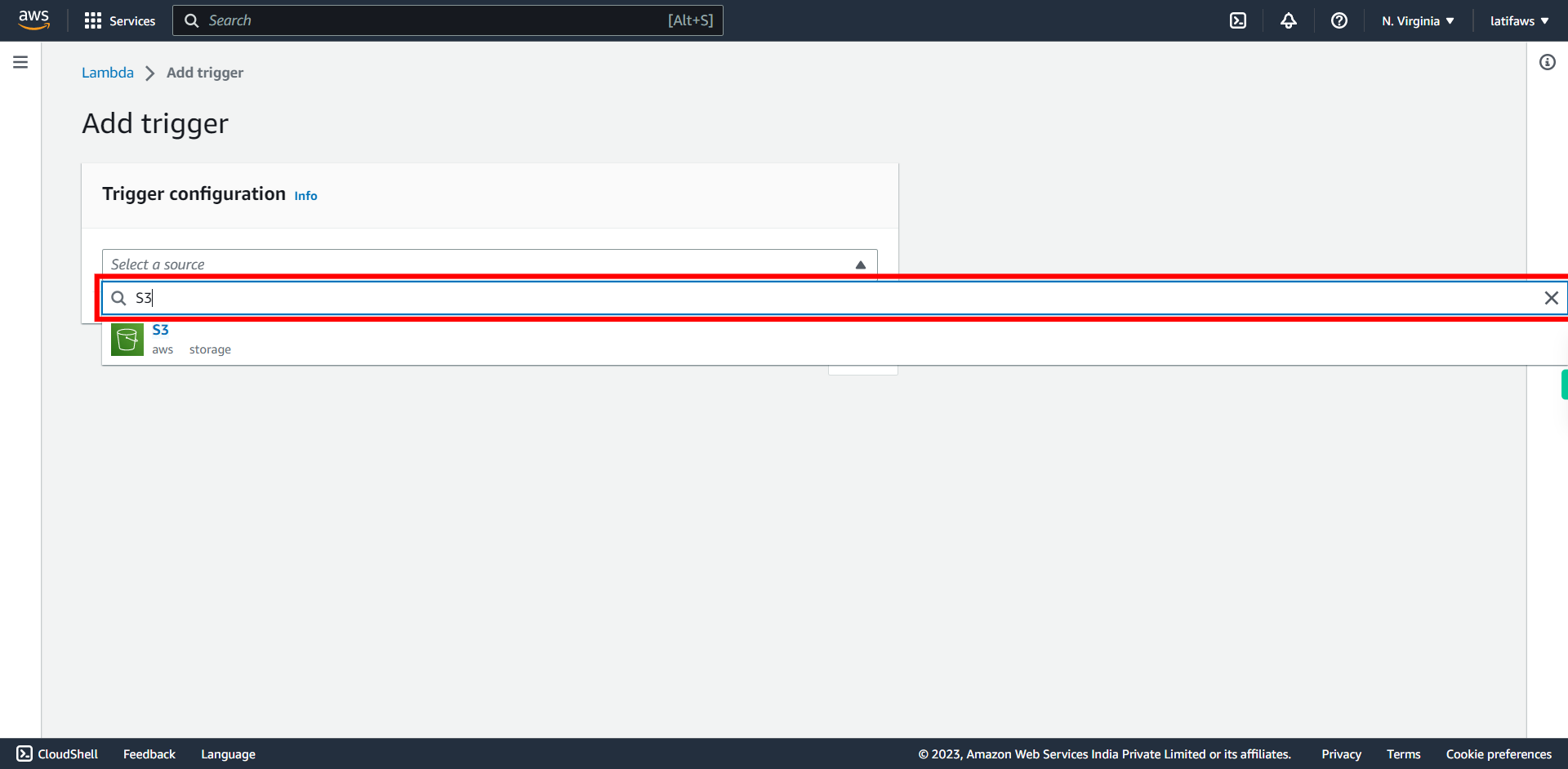

15.

Click "Select a source" under Trigger configuration.

### Triggers

A trigger is a service or resource that invokes your function. You can add triggers for existing resources only. When you add a trigger, the console configures the resources and permissions required to use it.

-

16.

Type **S3**, press Next in the Supervity instruction widget, and select **S3**.

-

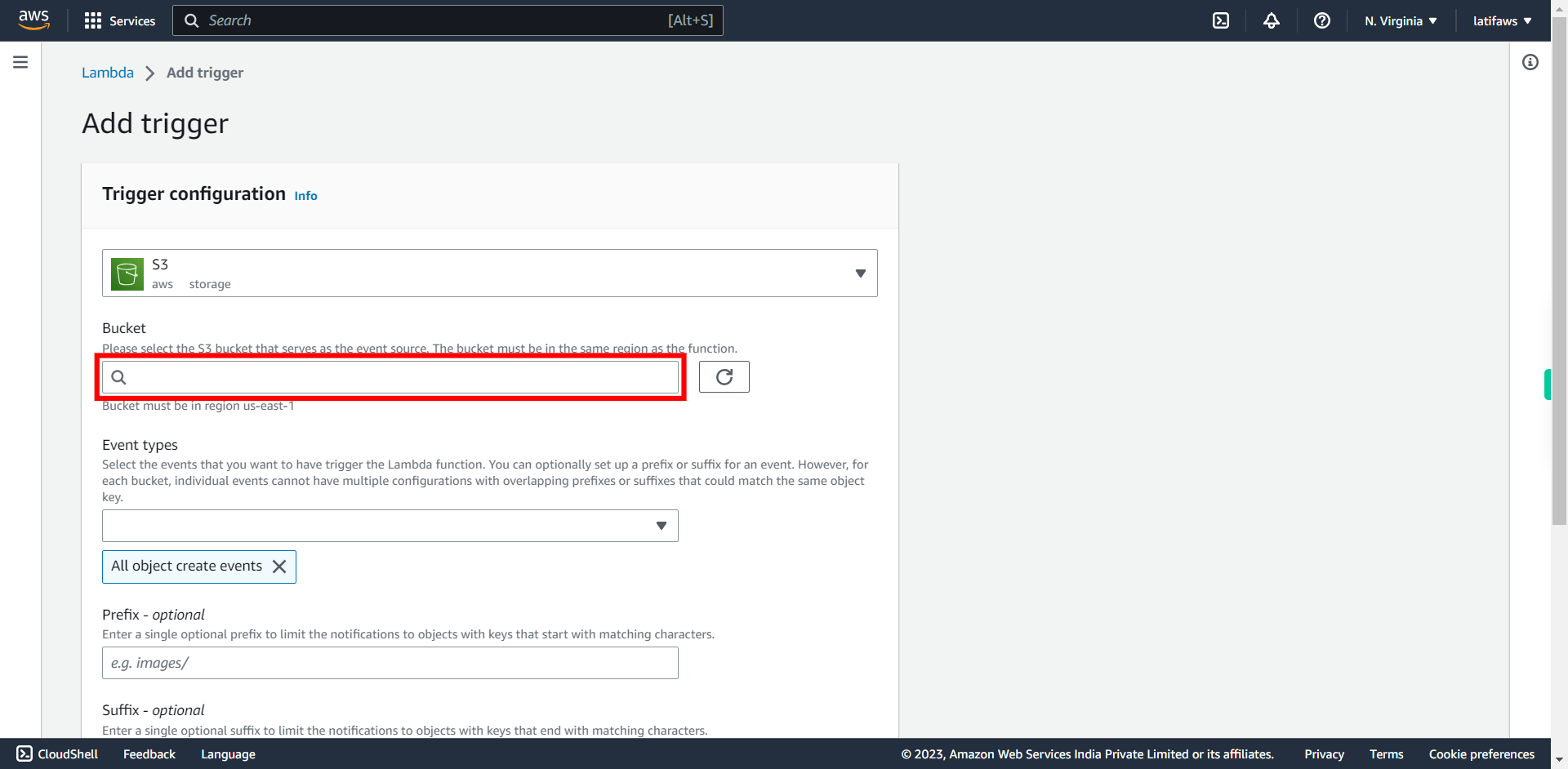

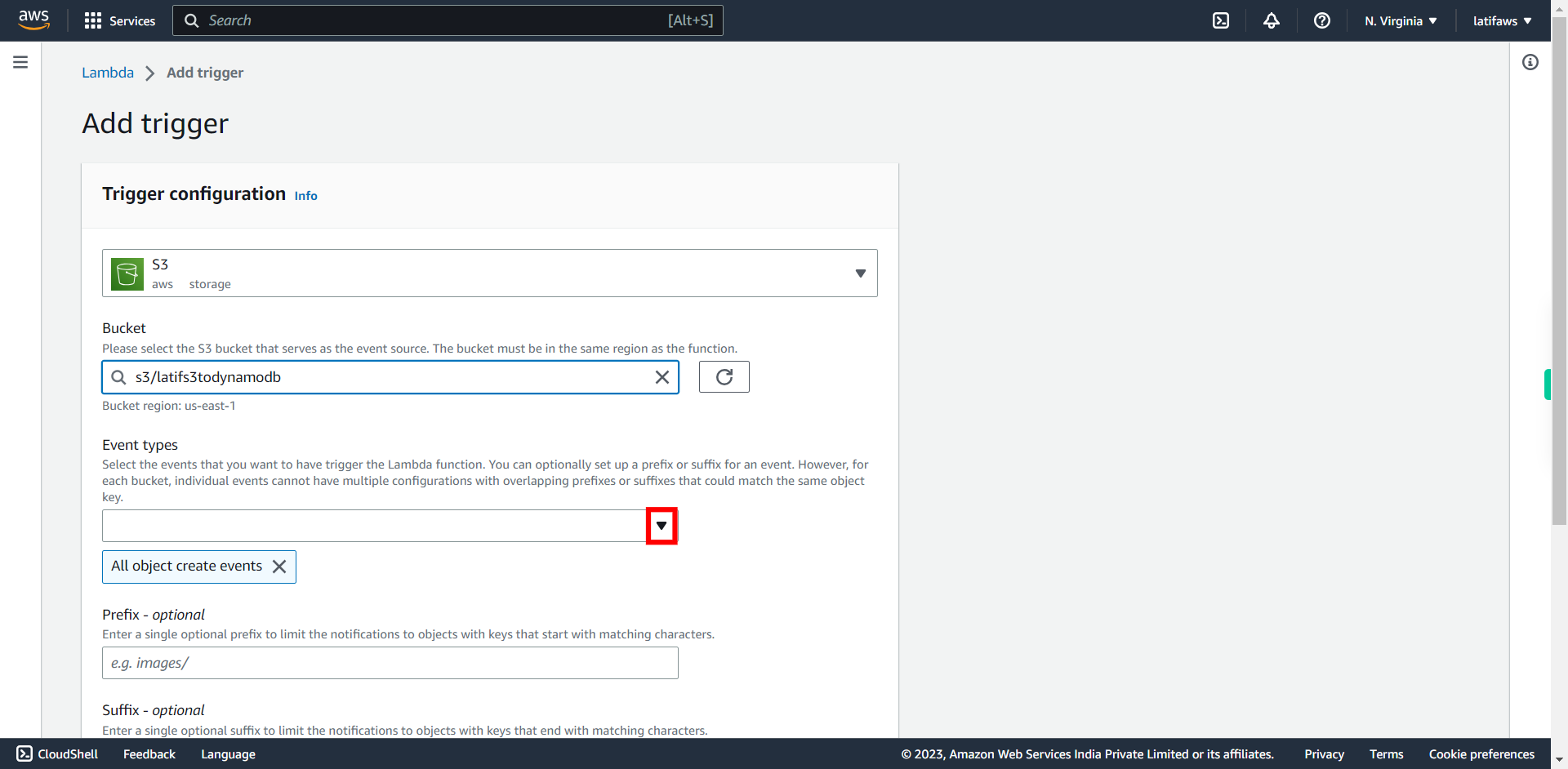

17.

Click "Bucket" and select **your bucket from the list**, that is, the bucket you created in Step 1 for this scenario. Click Next to continue.

### Note: If the bucket list does not appear, refresh the page and try again.

-

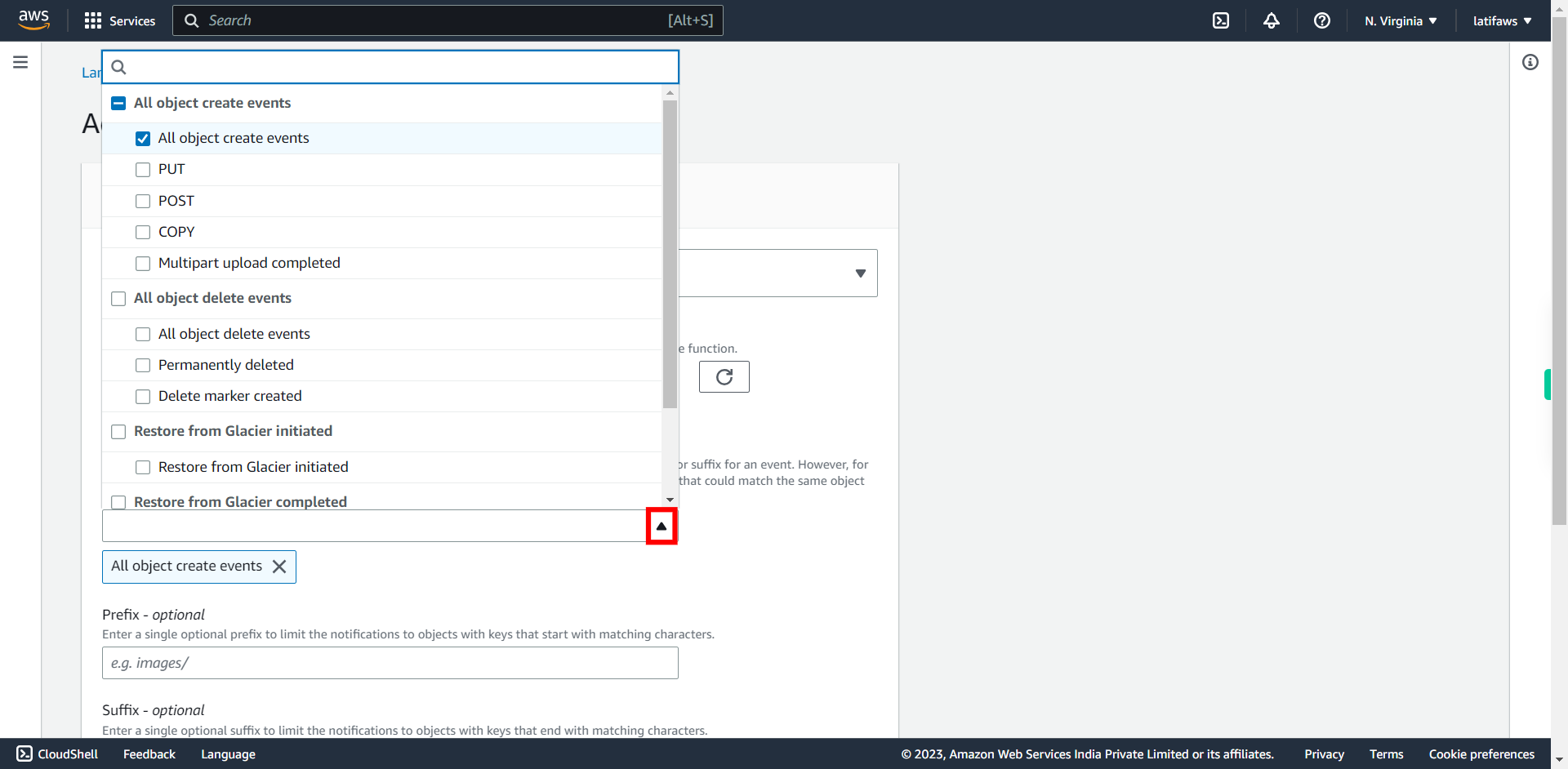

18.

To select the events that should trigger the Lambda function, click "Event types"

-

19.

Make sure all events under **All object create events** are selected. Click Next to continue.

-

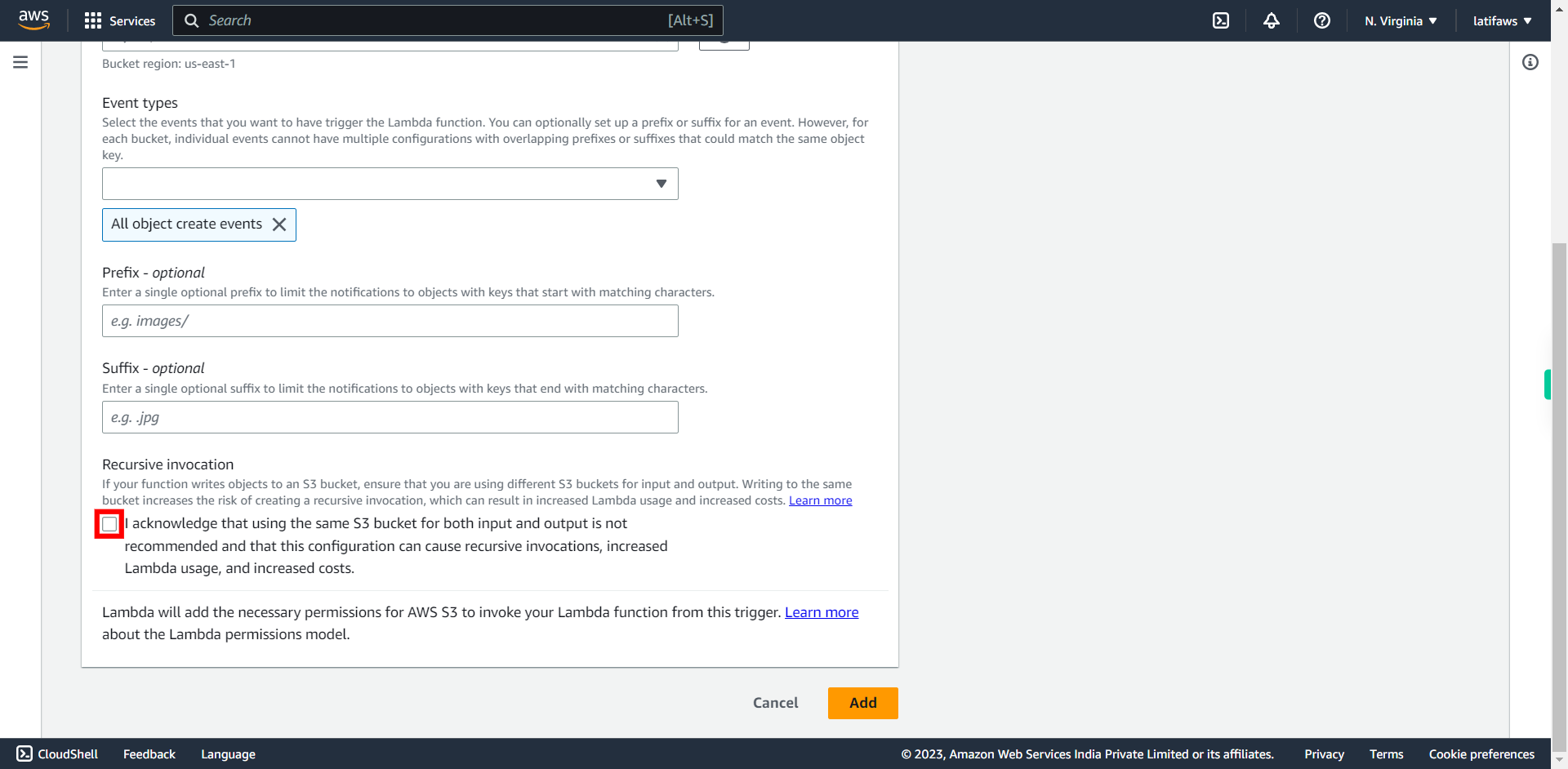

20.

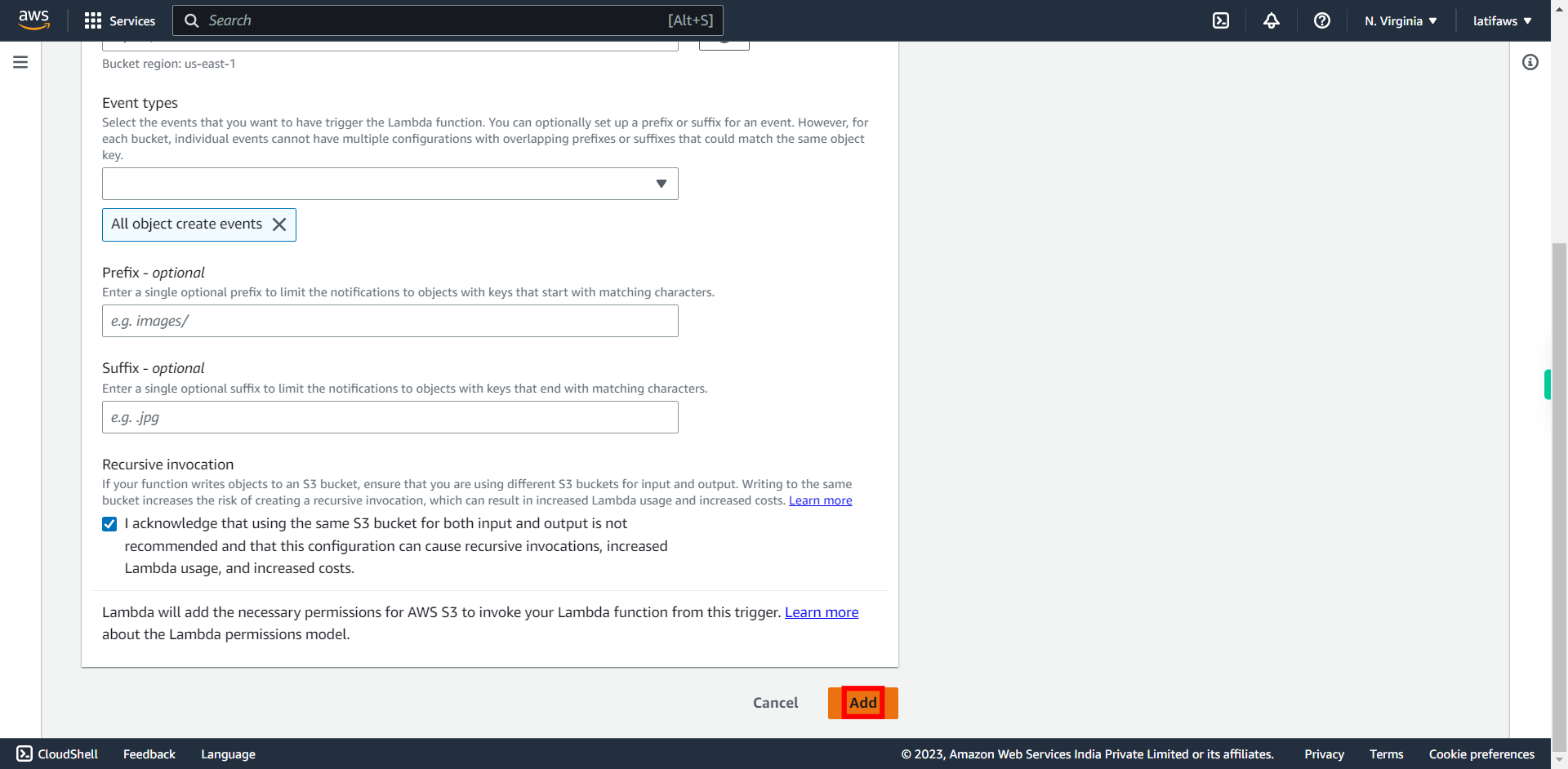

Select the "I acknowledge..." checkbox. Its full text reads: "I acknowledge that using the same S3 bucket for both input and output is not recommended and that this configuration can cause recursive invocations, increased Lambda usage, and increased costs." Click Next to continue.

-

21.

Click "Add". The S3 bucket trigger is now added to the function.
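The trigger that the console sets up corresponds roughly to two API calls: a permission that lets S3 invoke the function, and a bucket notification configuration for object-create events. The sketch below shows that shape with boto3 clients passed in; `build_notification_config` and `add_s3_trigger` are hypothetical helpers, the statement ID is an arbitrary illustrative value, and the function ARN is whatever ARN your function has.

```python
def build_notification_config(function_arn: str) -> dict:
    """Notification configuration that invokes the function on every object-create event."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                # "s3:ObjectCreated:*" matches all object-create event types.
                "Events": ["s3:ObjectCreated:*"],
            }
        ]
    }


def add_s3_trigger(s3_client, lambda_client, bucket: str, function_name: str, function_arn: str):
    """Grant S3 permission to invoke the function, then register the notification."""
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId="s3-invoke-permission",  # hypothetical statement ID
        Action="lambda:InvokeFunction",
        Principal="s3.amazonaws.com",
        SourceArn=f"arn:aws:s3:::{bucket}",
    )
    # This call replaces any notification configuration already set on the bucket.
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=build_notification_config(function_arn),
    )
```

Because the function in this scenario writes to DynamoDB and not back to the bucket, it does not create the recursive invocation loop that the acknowledgement warns about.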

-

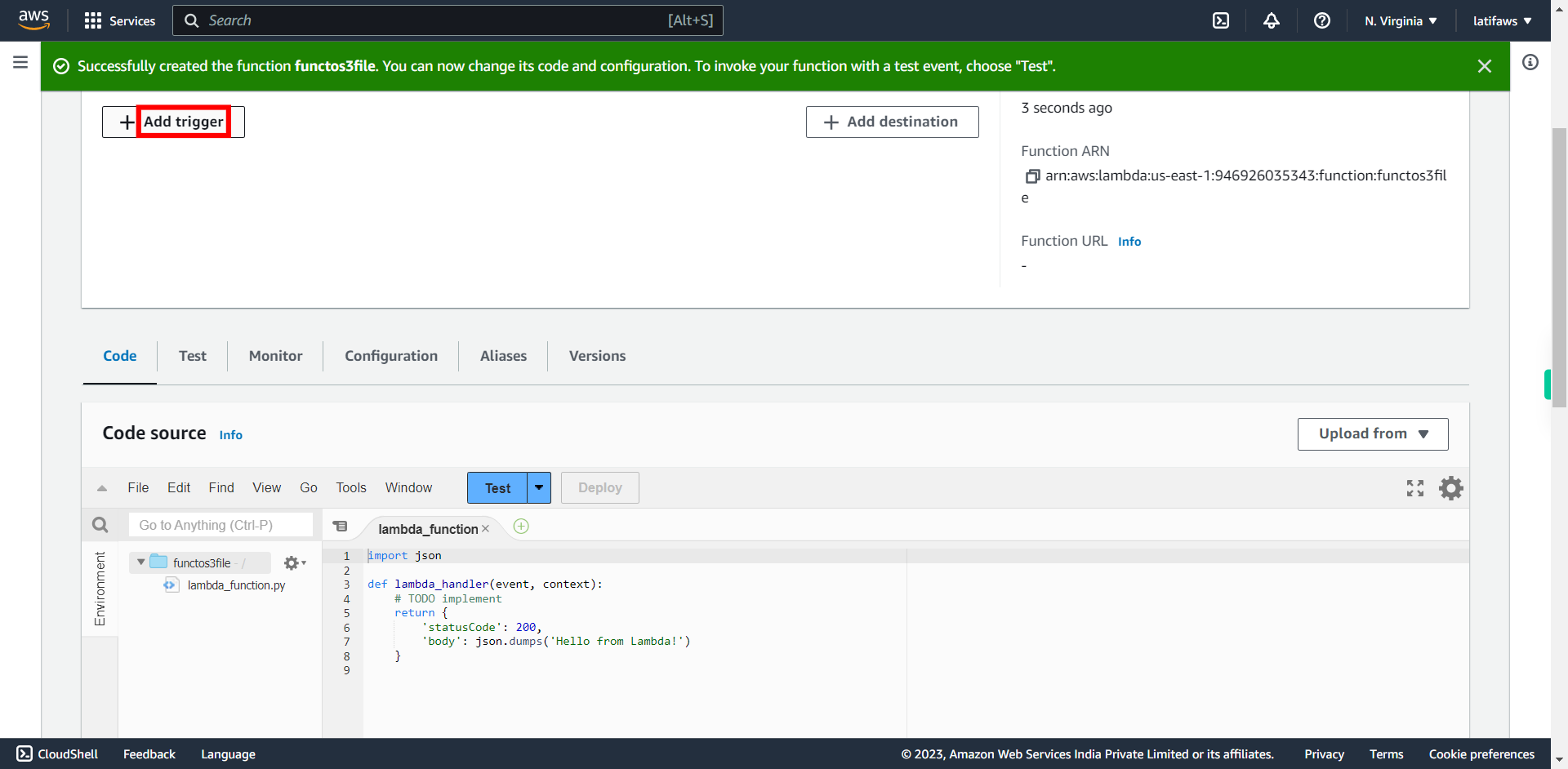

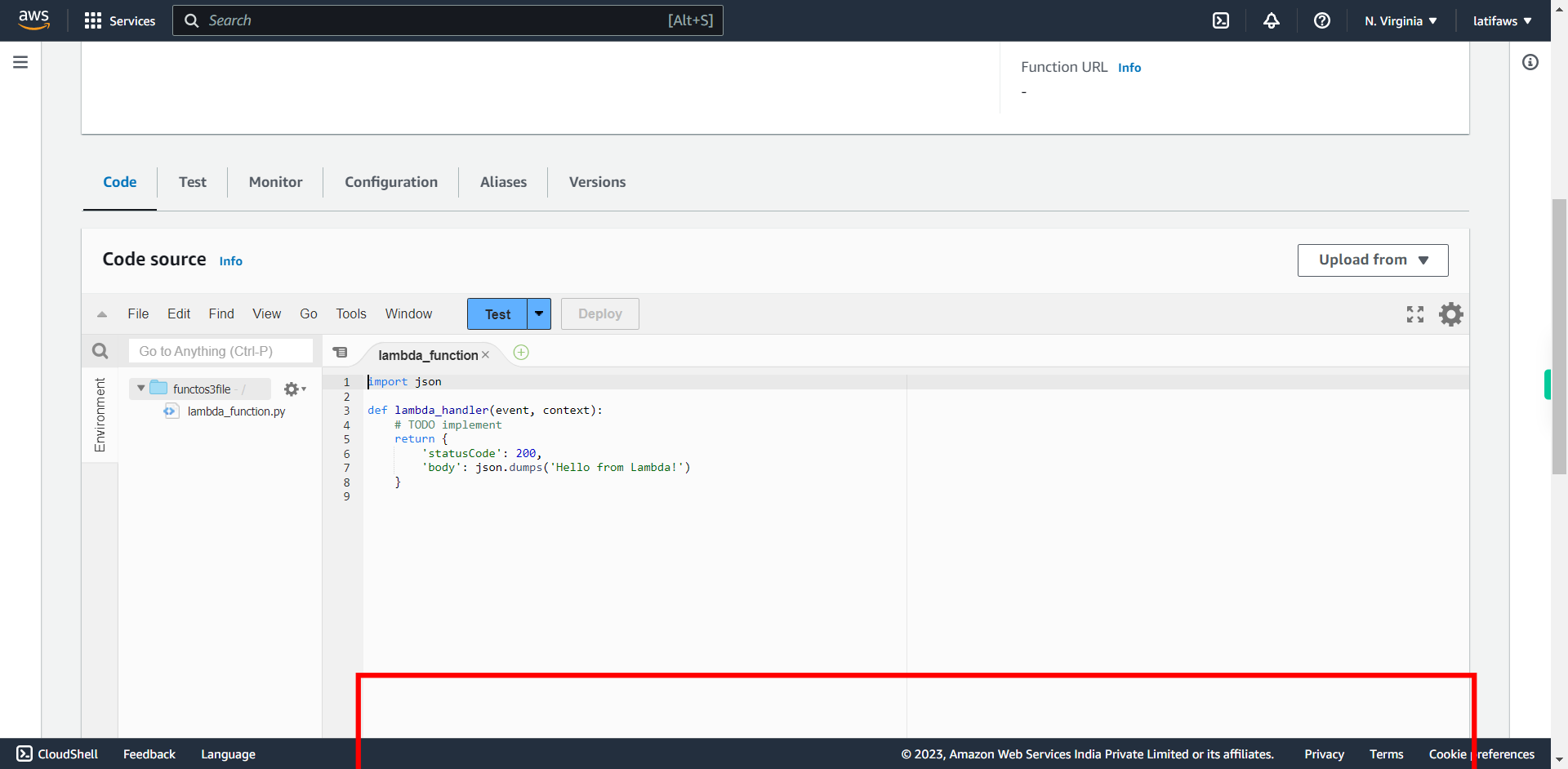

22.

Click "Code"

-

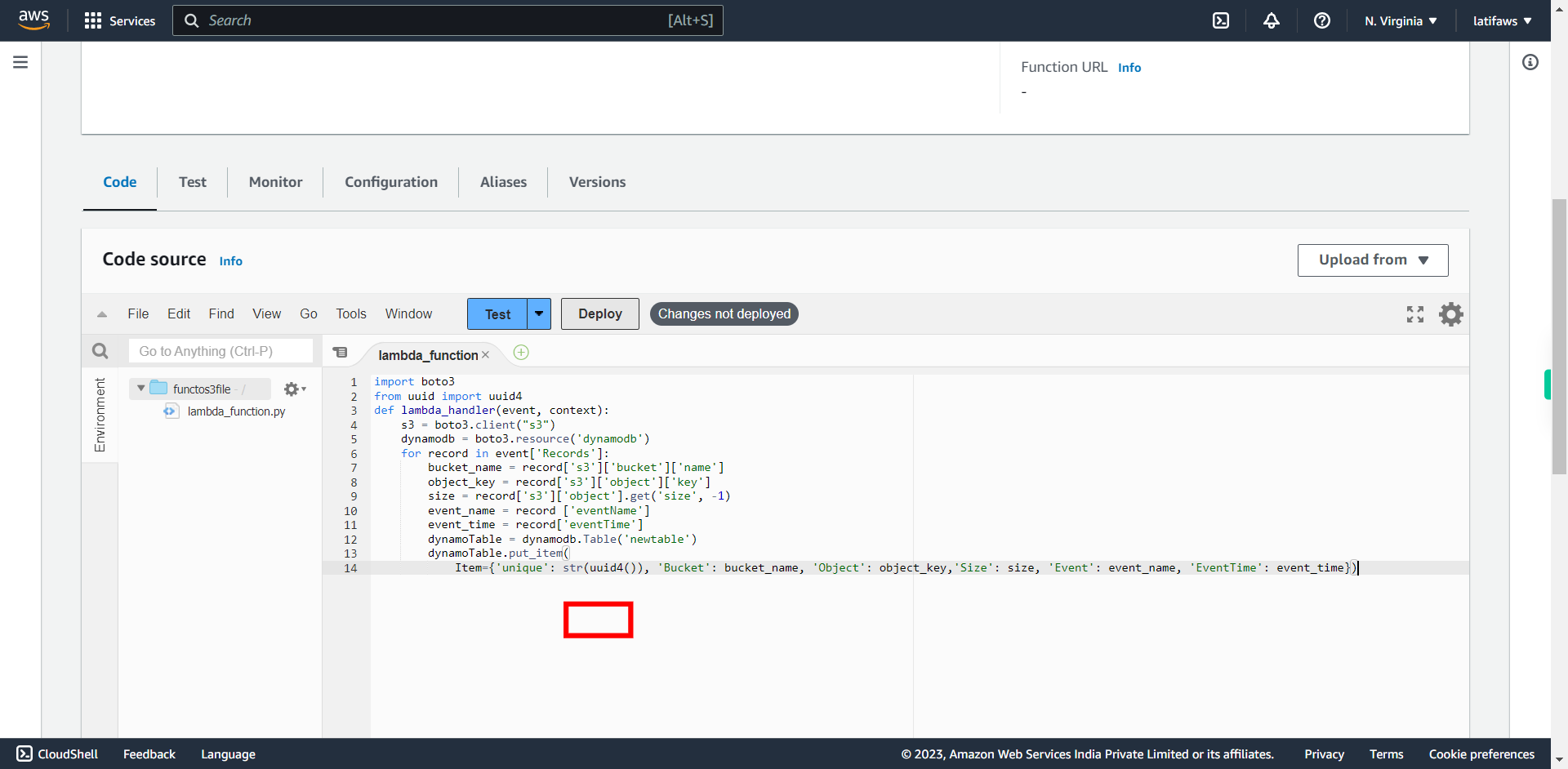

23.

Remove the existing code and paste the code given below. Once done, click Next to continue.

```
import urllib.parse
from uuid import uuid4

import boto3

# Create the table handle once so that warm invocations can reuse it.
dynamodb = boto3.resource('dynamodb')
dynamo_table = dynamodb.Table('newtable')  # use the name of your table from Step 3


def lambda_handler(event, context):
    # One S3 notification can carry several records; store one item per record.
    for record in event['Records']:
        bucket_name = record['s3']['bucket']['name']
        # Object keys arrive URL-encoded in S3 events (for example, spaces as '+').
        object_key = urllib.parse.unquote_plus(record['s3']['object']['key'])
        size = record['s3']['object'].get('size', -1)
        event_name = record['eventName']
        event_time = record['eventTime']
        dynamo_table.put_item(
            Item={
                'unique': str(uuid4()),  # random ID generated for each item
                'Bucket': bucket_name,
                'Object': object_key,
                'Size': size,
                'Event': event_name,
                'EventTime': event_time,
            }
        )
```
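To see which fields the handler reads, the following sketch builds a trimmed S3 event by hand and extracts the same fields locally, without calling AWS. The sample event values and the `extract_metadata` helper are illustrative assumptions; real S3 events carry many more fields. S3 URL-encodes object keys in event notifications, so the sketch decodes the key with `unquote_plus`.

```python
from urllib.parse import unquote_plus

# Hand-built, trimmed example of an S3 "object created" event (values are made up).
SAMPLE_EVENT = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "eventTime": "2024-01-01T12:00:00.000Z",
            "s3": {
                "bucket": {"name": "my-example-bucket"},
                "object": {"key": "reports/my+file.txt", "size": 1024},
            },
        }
    ]
}


def extract_metadata(record: dict) -> dict:
    """Pull the fields that the handler stores in DynamoDB out of one event record."""
    s3_info = record["s3"]
    return {
        "Bucket": s3_info["bucket"]["name"],
        "Object": unquote_plus(s3_info["object"]["key"]),  # decode '+' and %XX
        "Size": s3_info["object"].get("size", -1),  # -1 when the size is absent
        "Event": record["eventName"],
        "EventTime": record["eventTime"],
    }


for record in SAMPLE_EVENT["Records"]:
    print(extract_metadata(record))
```

Running this locally is a quick way to confirm the attribute names you should expect to see in the table later.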

-

24.

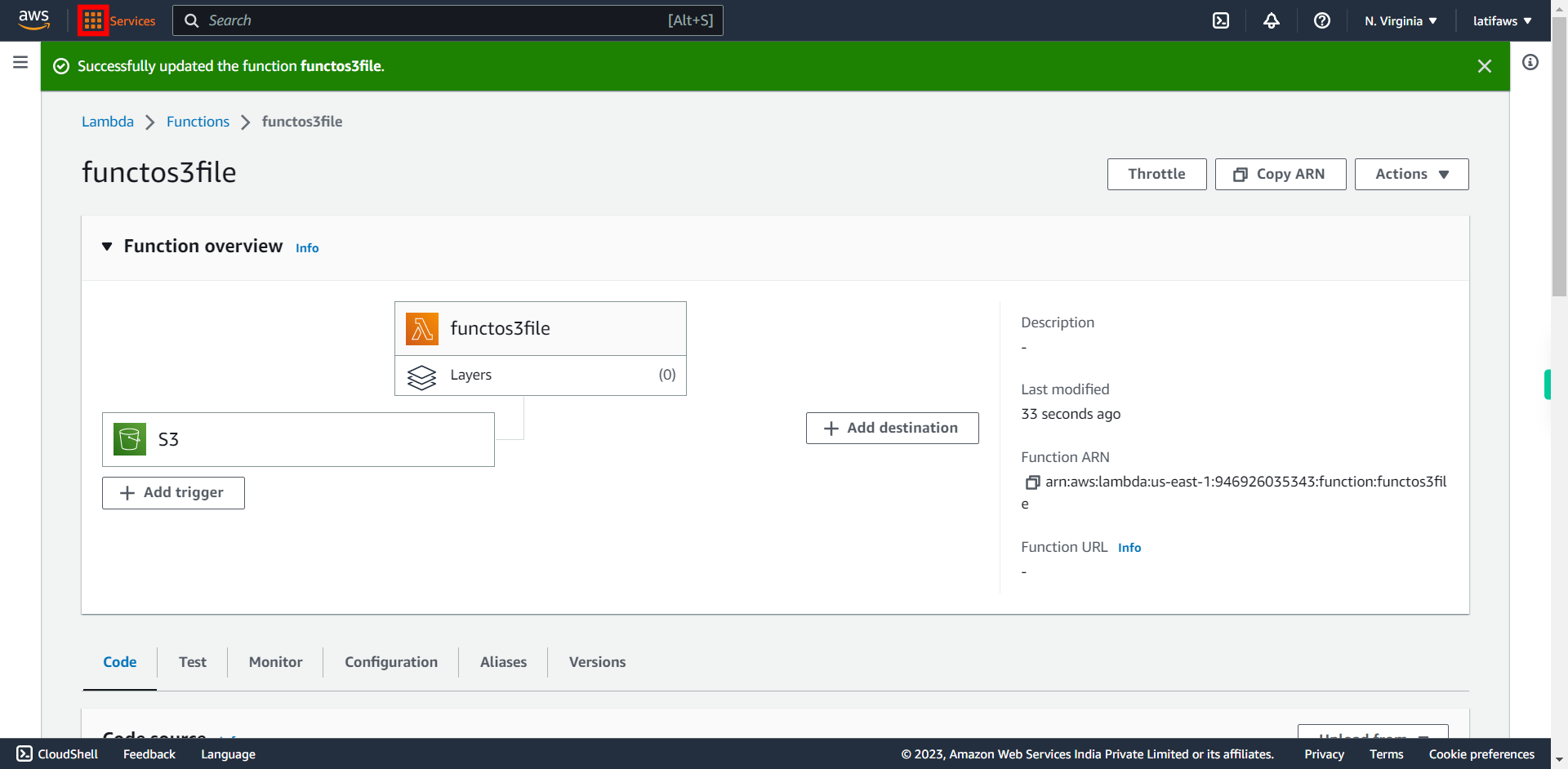

Click "Deploy" and click Next to continue. The code will be deployed. Next, verify the code by uploading a file to the S3 bucket and checking that the file's metadata appears in the DynamoDB table.
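Before uploading a real file, the deployed function can optionally be exercised with a hand-built event. The sketch below is an assumption-laden illustration, not part of the original scenario: `invoke_with_sample_event` is a hypothetical helper, the event is a trimmed imitation of an S3 notification, and a successful call writes an item with these made-up values to your DynamoDB table.

```python
import json


def invoke_with_sample_event(lambda_client, function_name, bucket, key, size=1024):
    """Invoke the function synchronously with a minimal, hand-built S3-style event."""
    event = {
        "Records": [
            {
                "eventName": "ObjectCreated:Put",
                "eventTime": "2024-01-01T12:00:00.000Z",  # made-up timestamp
                "s3": {
                    "bucket": {"name": bucket},
                    "object": {"key": key, "size": size},
                },
            }
        ]
    }
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # wait for the function to finish
        Payload=json.dumps(event).encode("utf-8"),
    )
    # A synchronous invoke returns status 200; "FunctionError" appears if the code raised.
    return response["StatusCode"] == 200 and "FunctionError" not in response
```

With real credentials, the client would be `boto3.client("lambda")`.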

-

25.

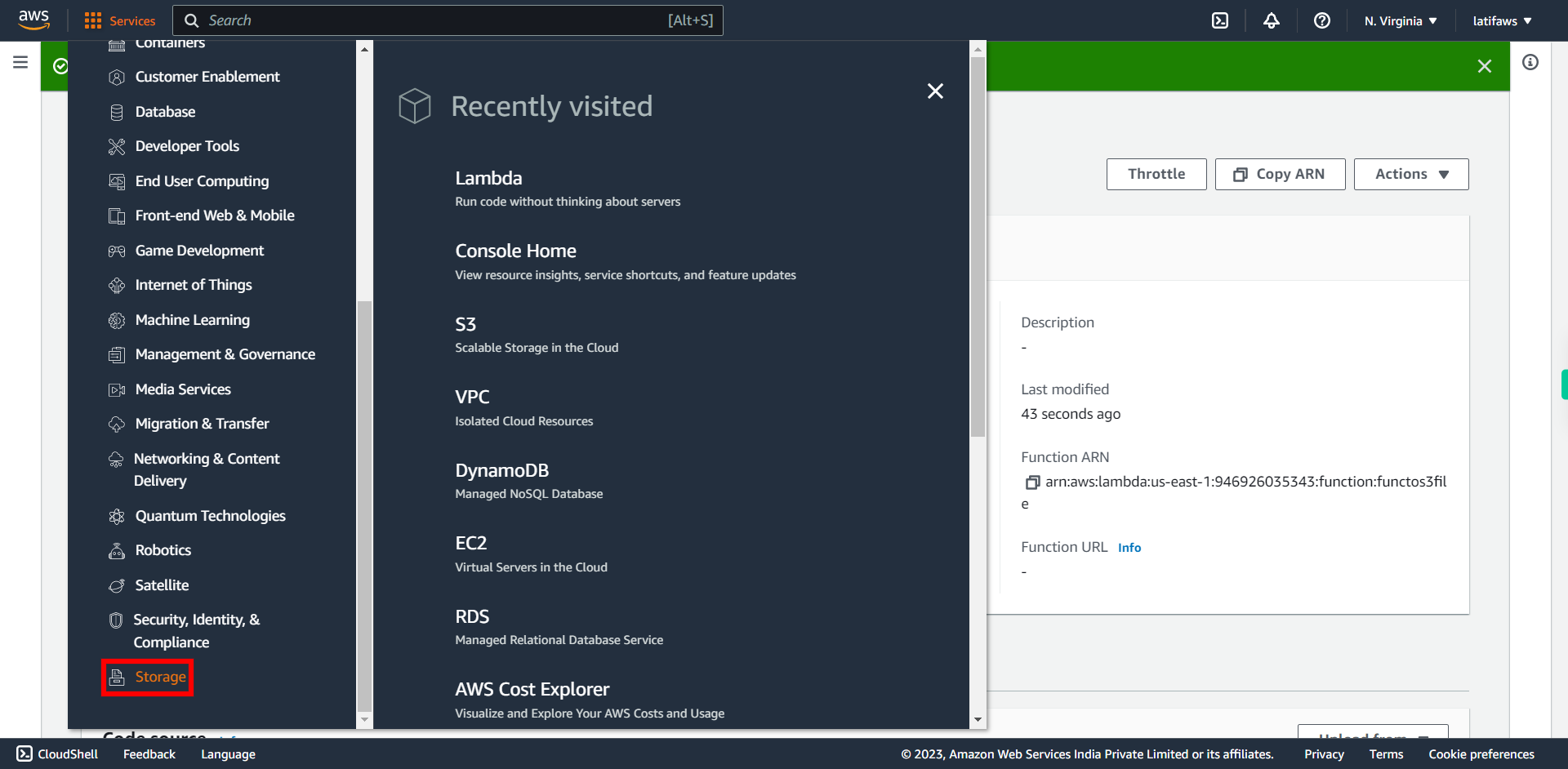

Now upload a file to your S3 bucket. Click "Services"

-

26.

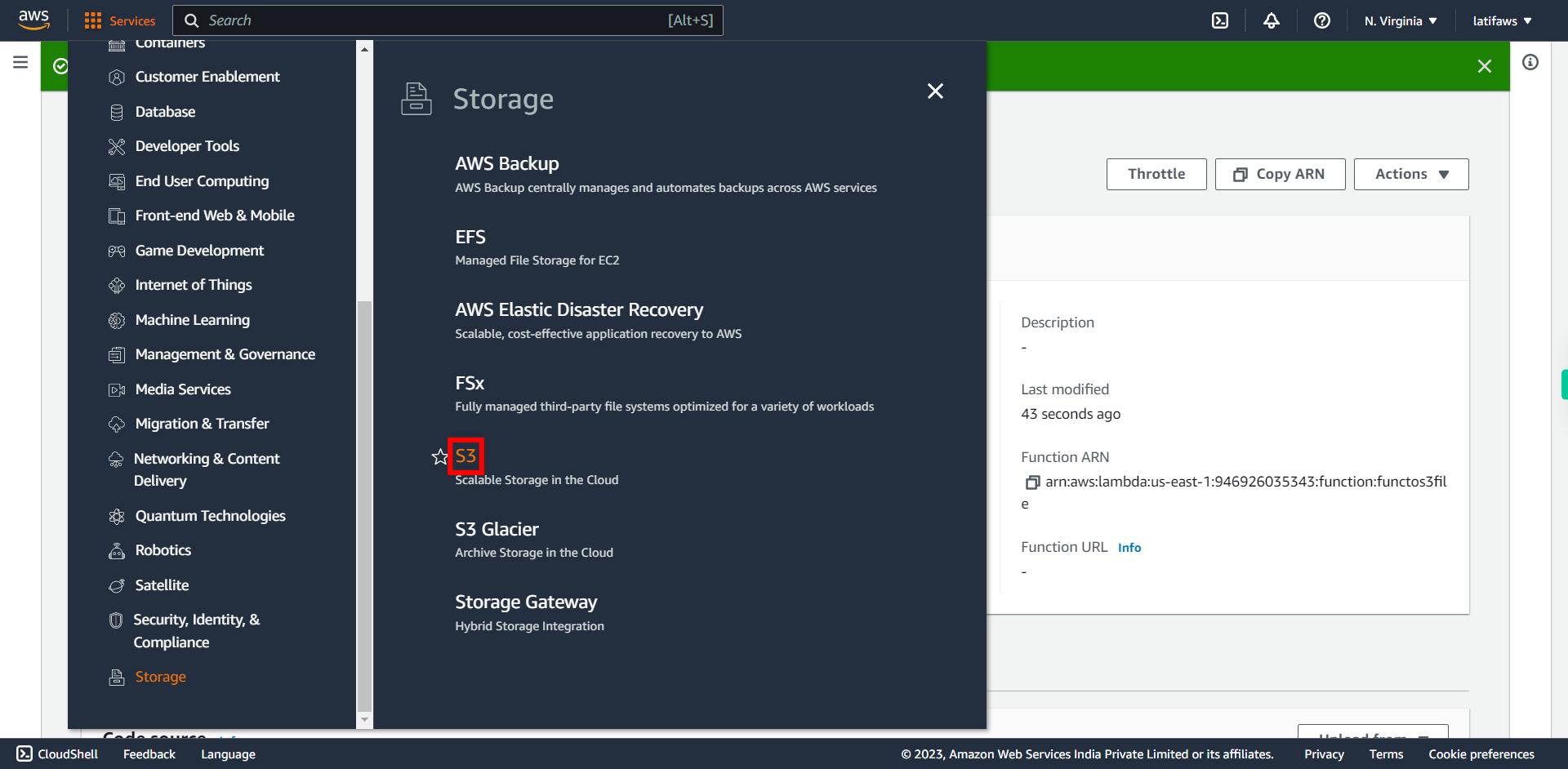

Click "Storage"

-

27.

Click "S3"

-

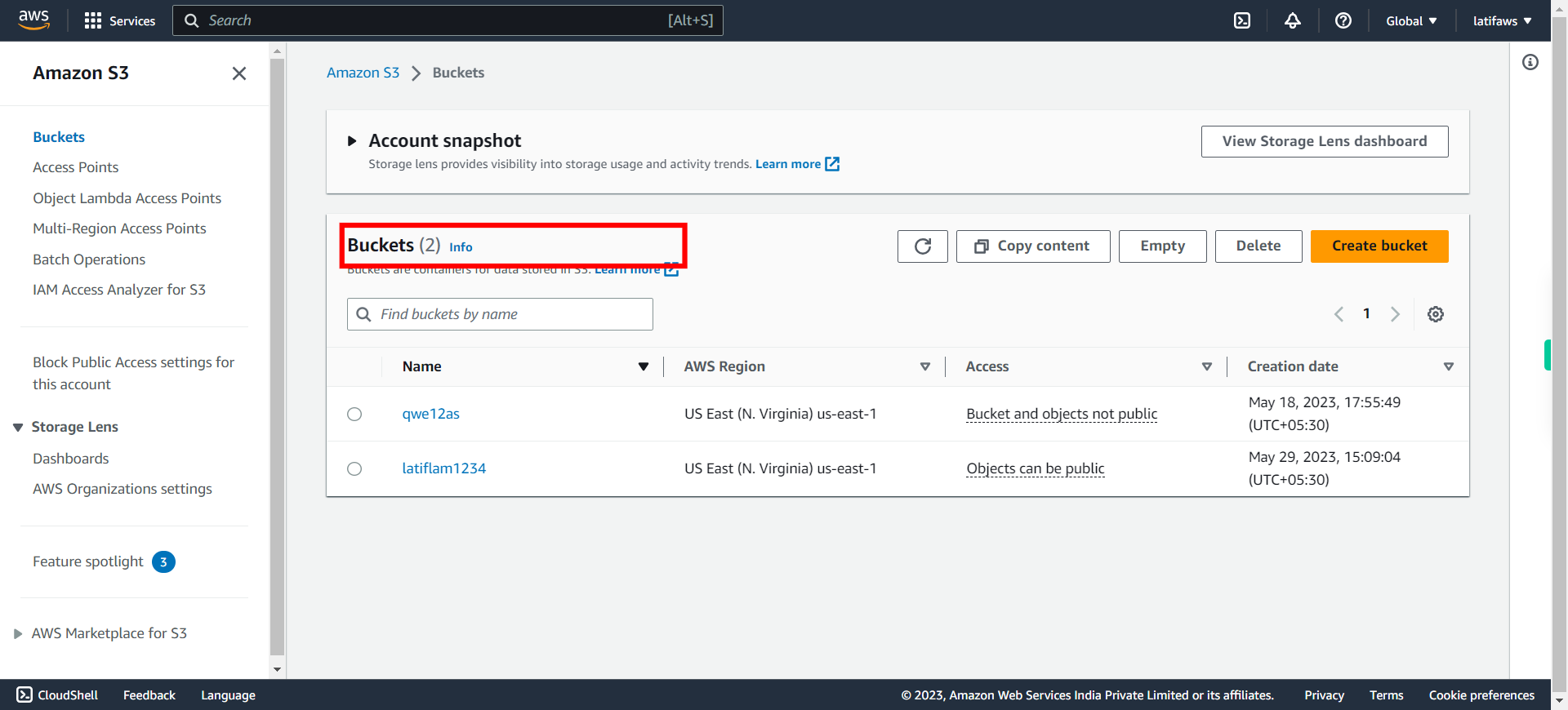

28.

**Select the S3 bucket that you created for this scenario in Step 1.**

#### Press Next in the instruction widget, and then open your bucket

-

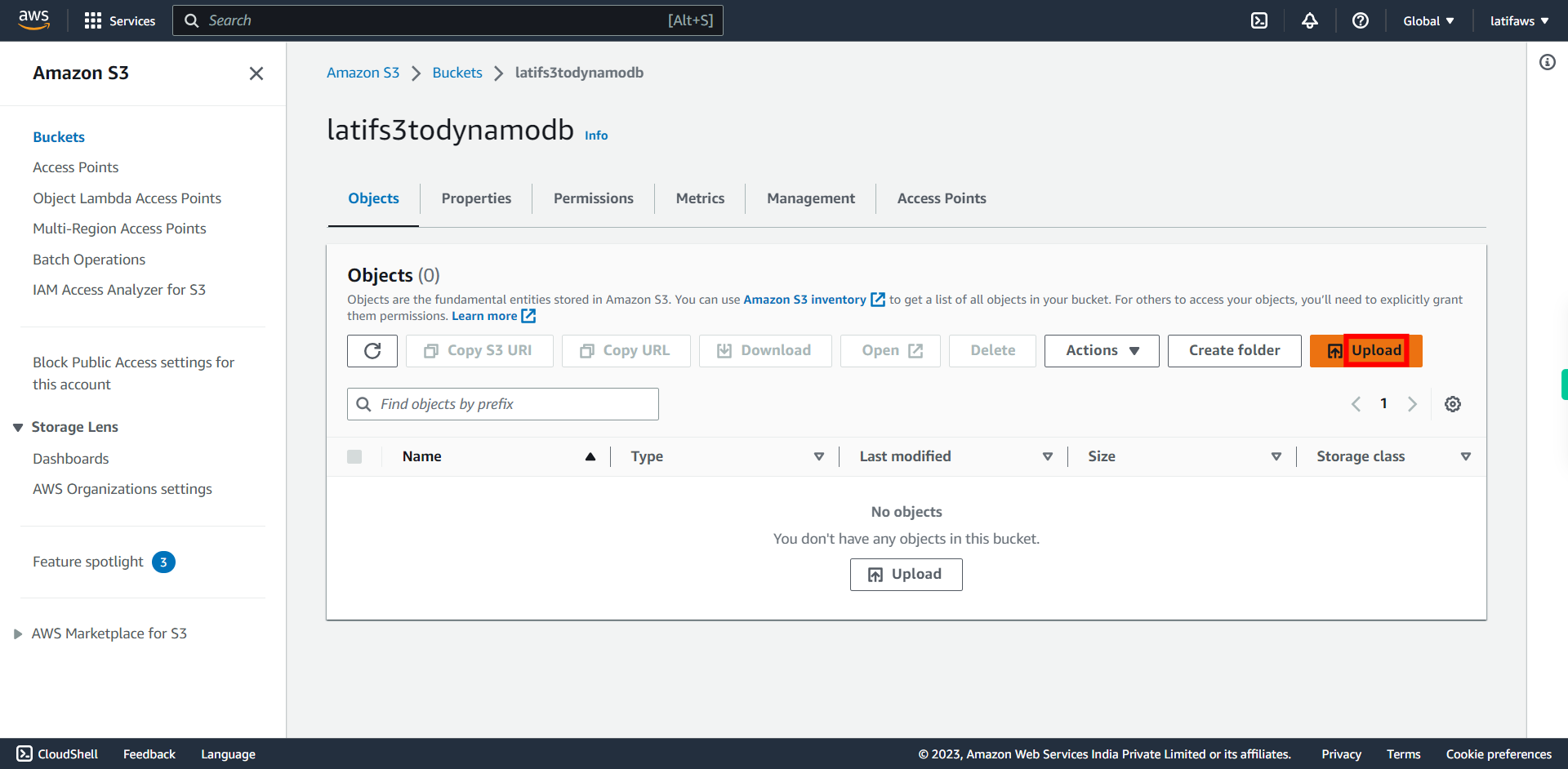

29.

Click "Upload"

-

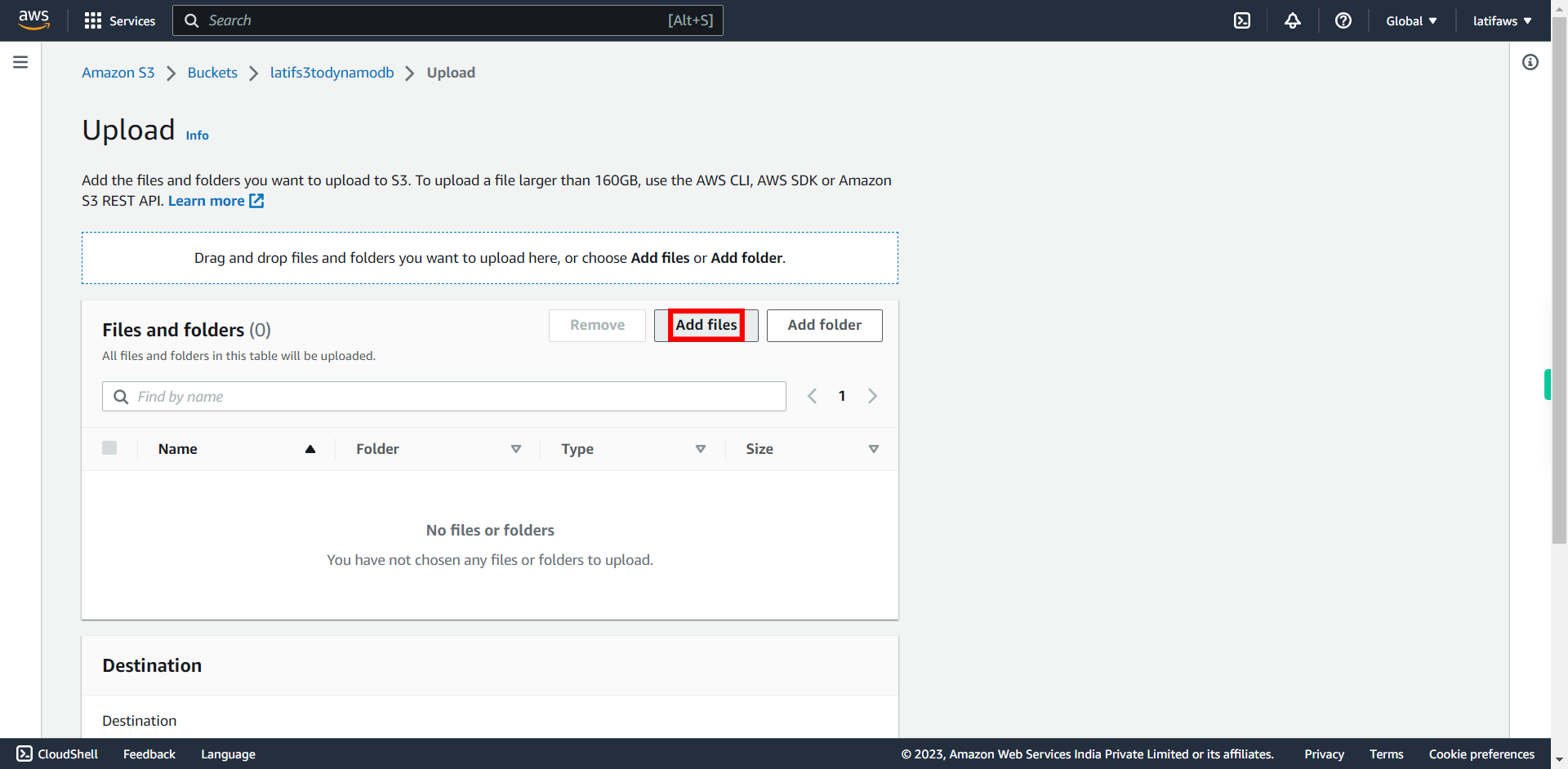

30.

Click "Add files" and add files from your system.

### Upload a small file (a few KB)

Once done, click Next to continue.

-

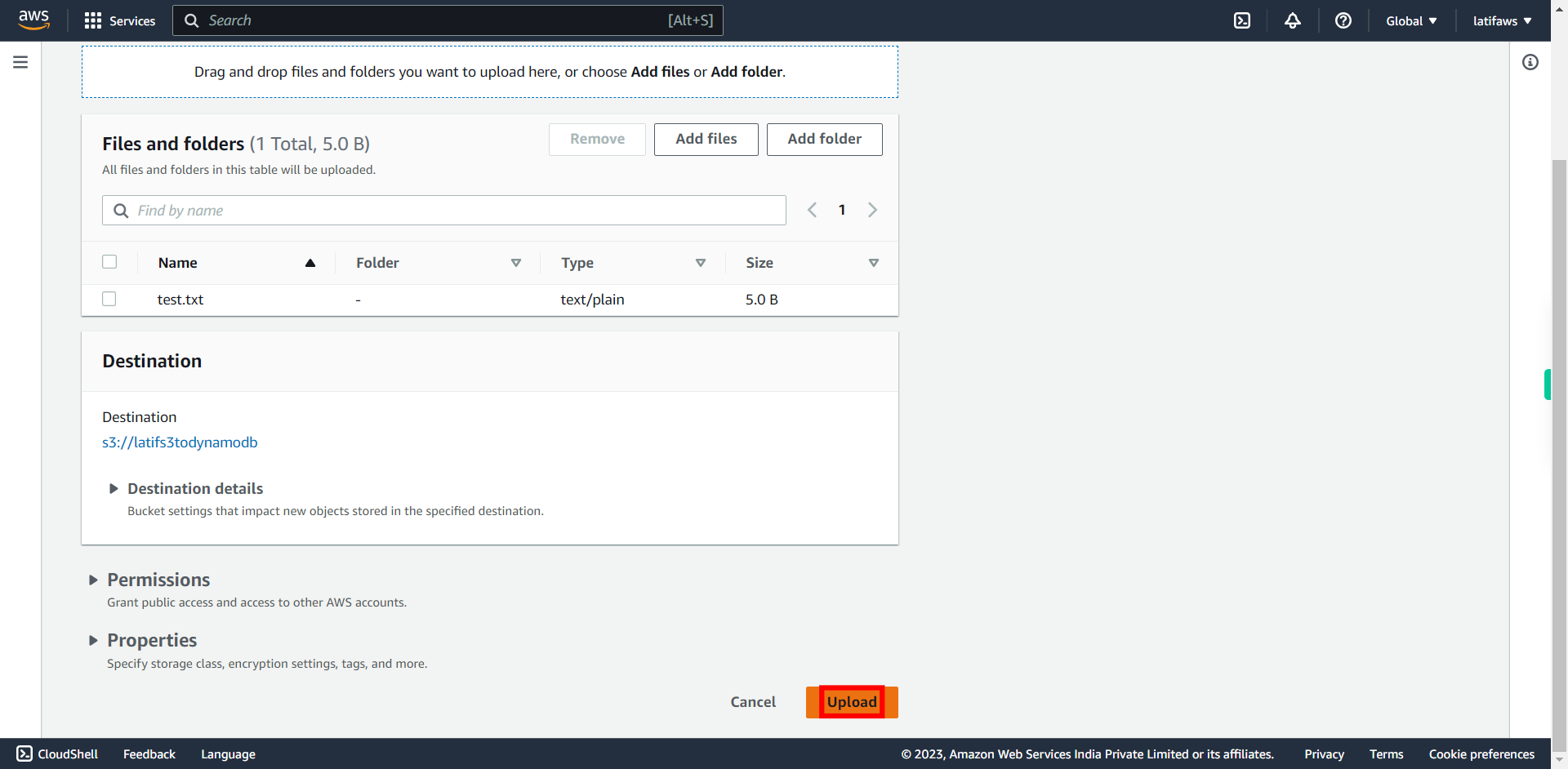

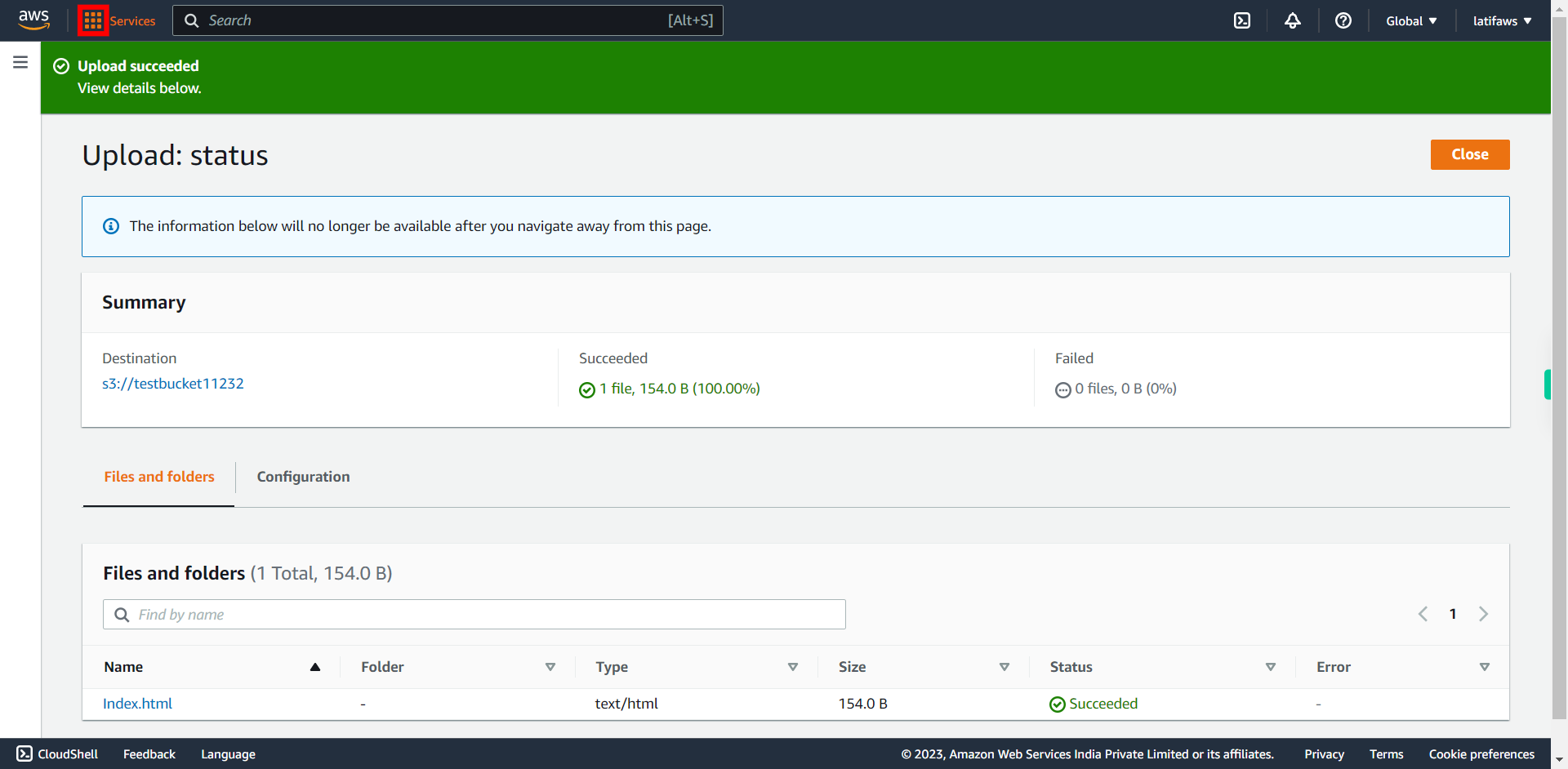

31.

Click "Upload". The file will be uploaded to your bucket.

# Now check whether the file's metadata is reflected in the DynamoDB table.
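As an alternative to the console upload above, a small file can be uploaded with boto3. This is a minimal sketch, assuming configured credentials; `upload_small_file` is a hypothetical helper, and the 100 KB limit is an arbitrary illustrative threshold that mirrors the advice to upload a small file.

```python
import os


def upload_small_file(s3_client, bucket: str, path: str, max_bytes: int = 100 * 1024) -> str:
    """Upload a local file under its own file name and return the object key used."""
    size = os.path.getsize(path)
    if size > max_bytes:
        raise ValueError(f"{path} is {size} bytes; expected at most {max_bytes}")
    key = os.path.basename(path)
    # upload_file takes the local file name, the bucket name, and the object key.
    s3_client.upload_file(path, bucket, key)
    return key
```

With real credentials, the call would be `upload_small_file(boto3.client("s3"), "<your bucket>", "<path to a small file>")`.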

-

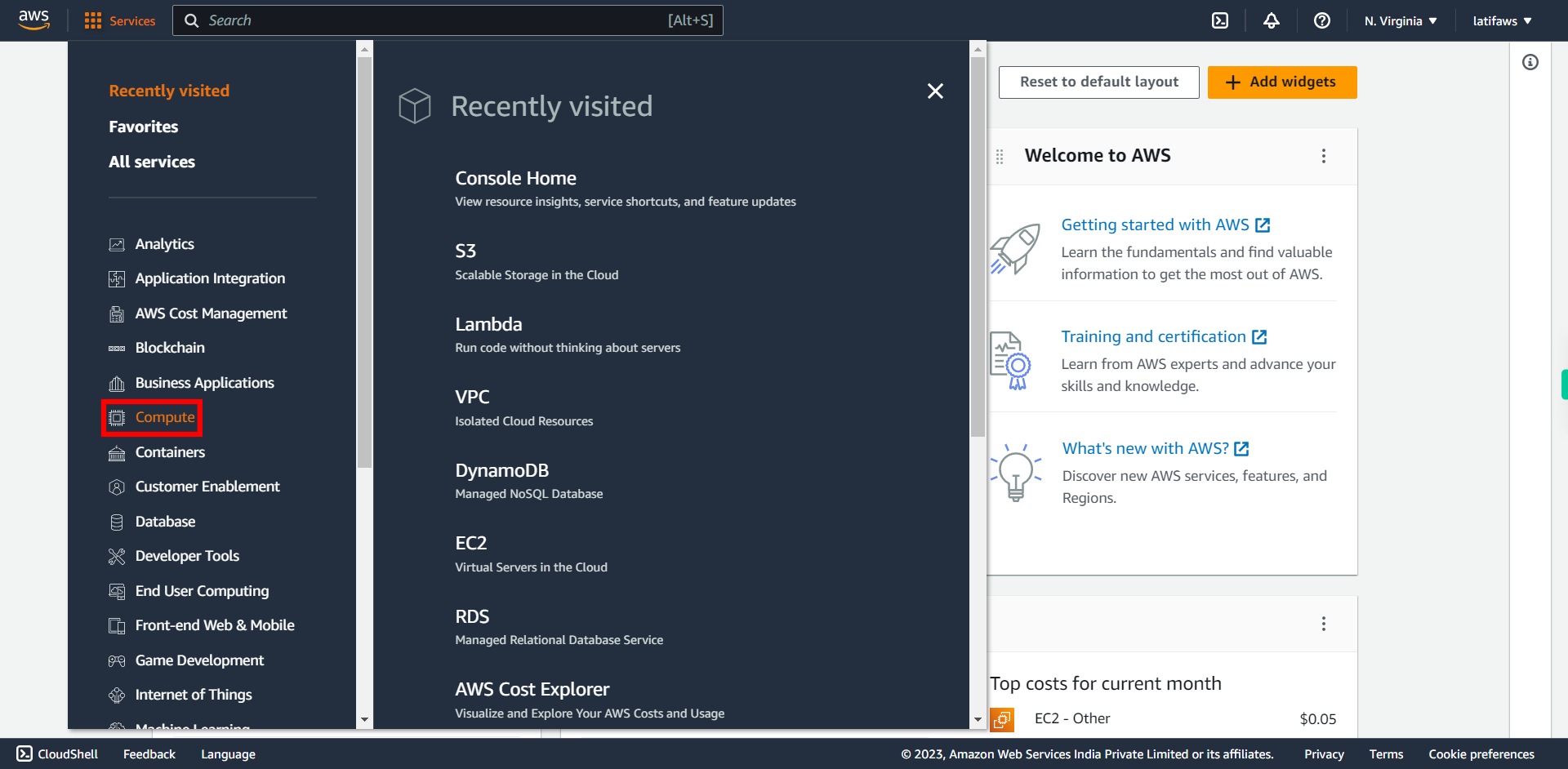

32.

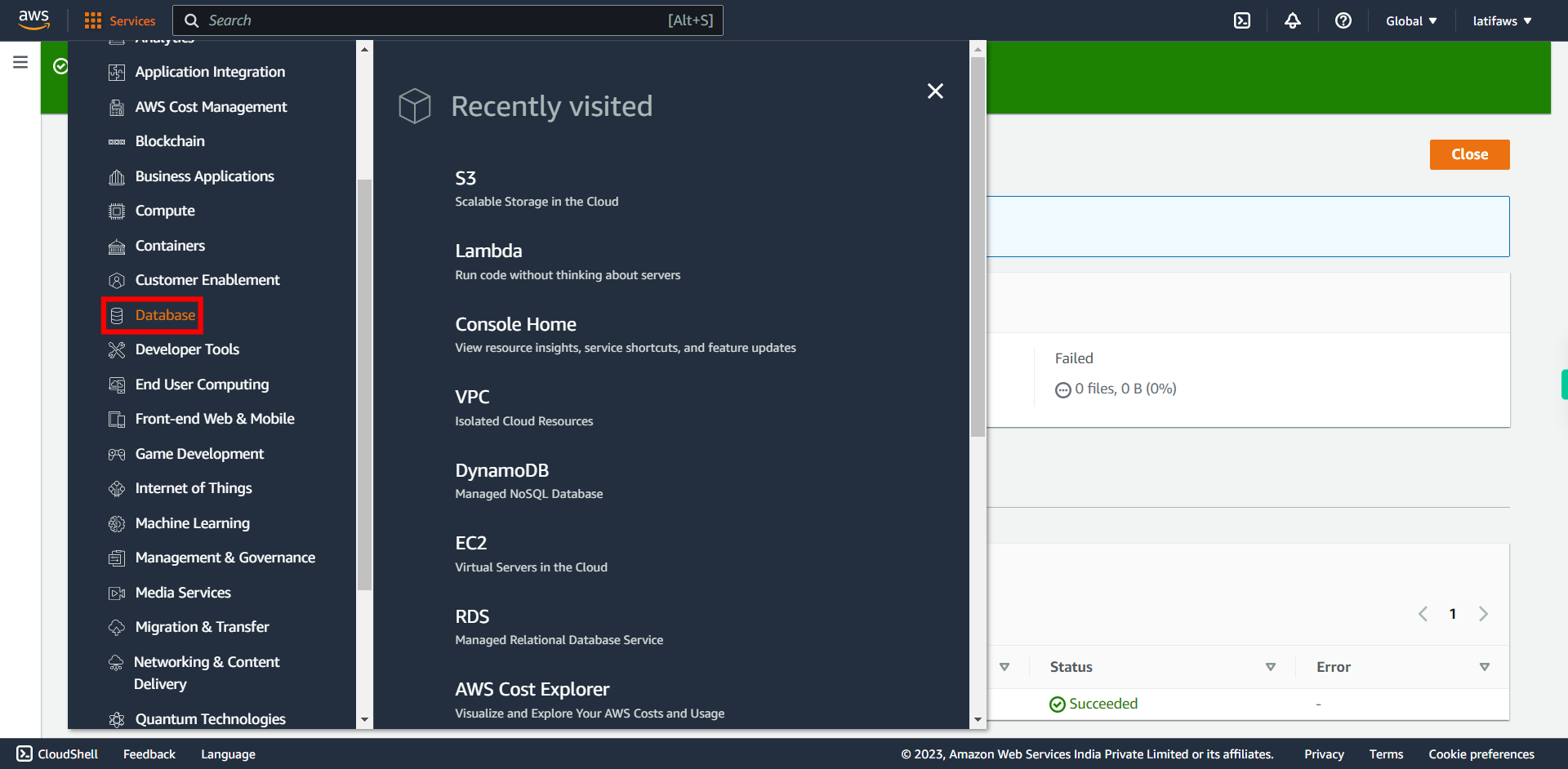

To verify that the DynamoDB table contains the file's metadata, click "Services"

-

33.

Click "Database"

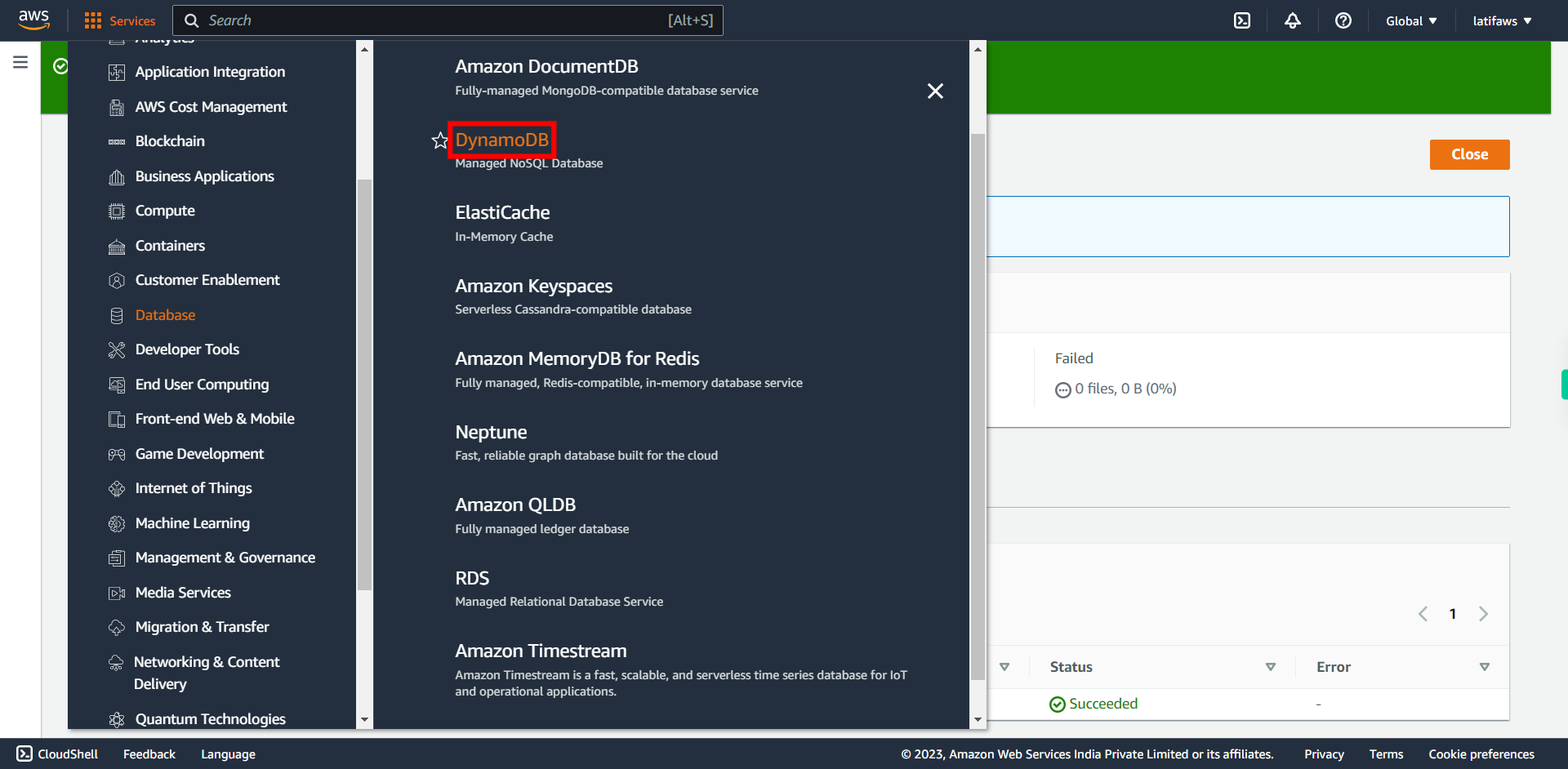

-

34.

Click "DynamoDB"

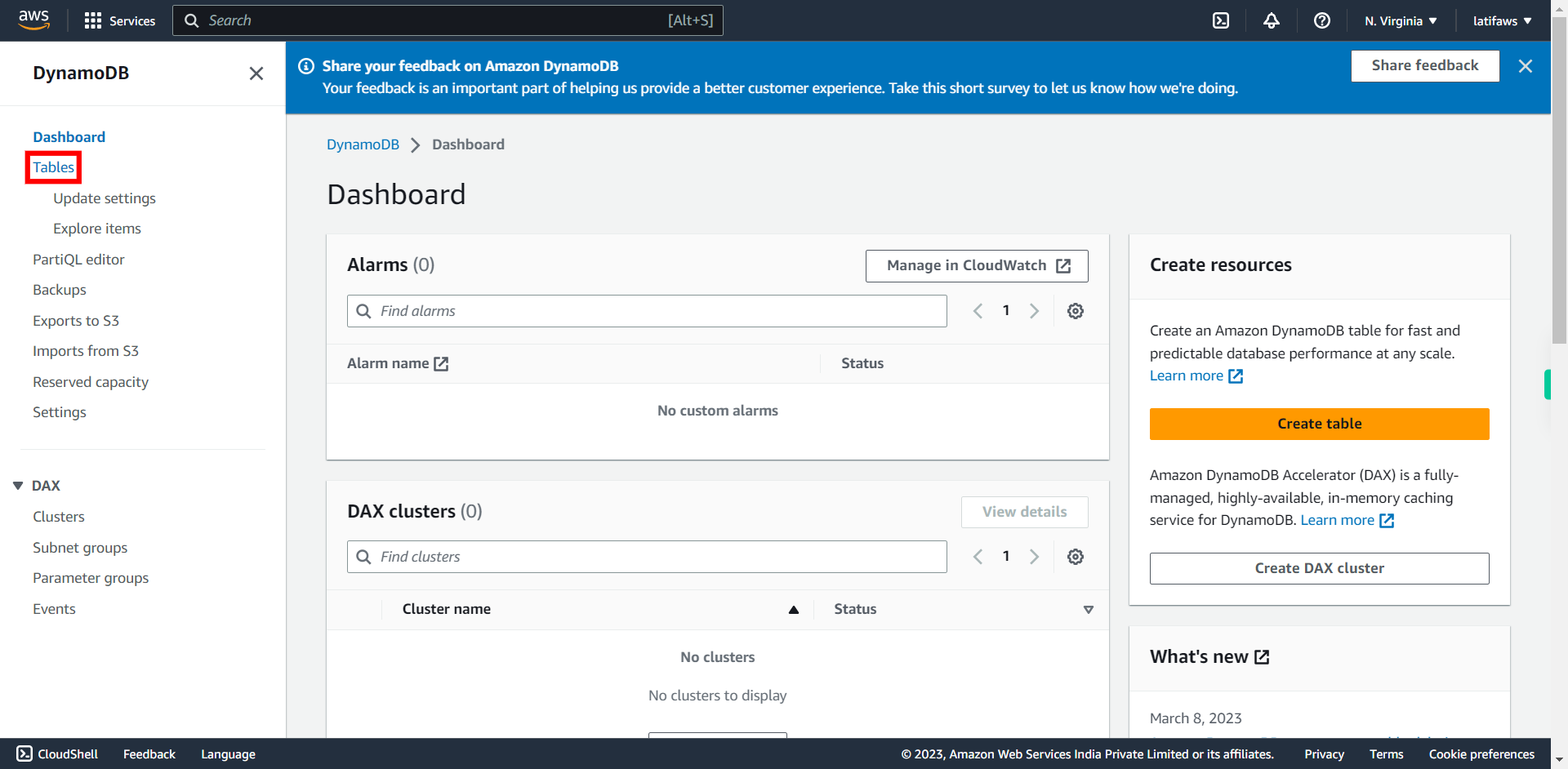

-

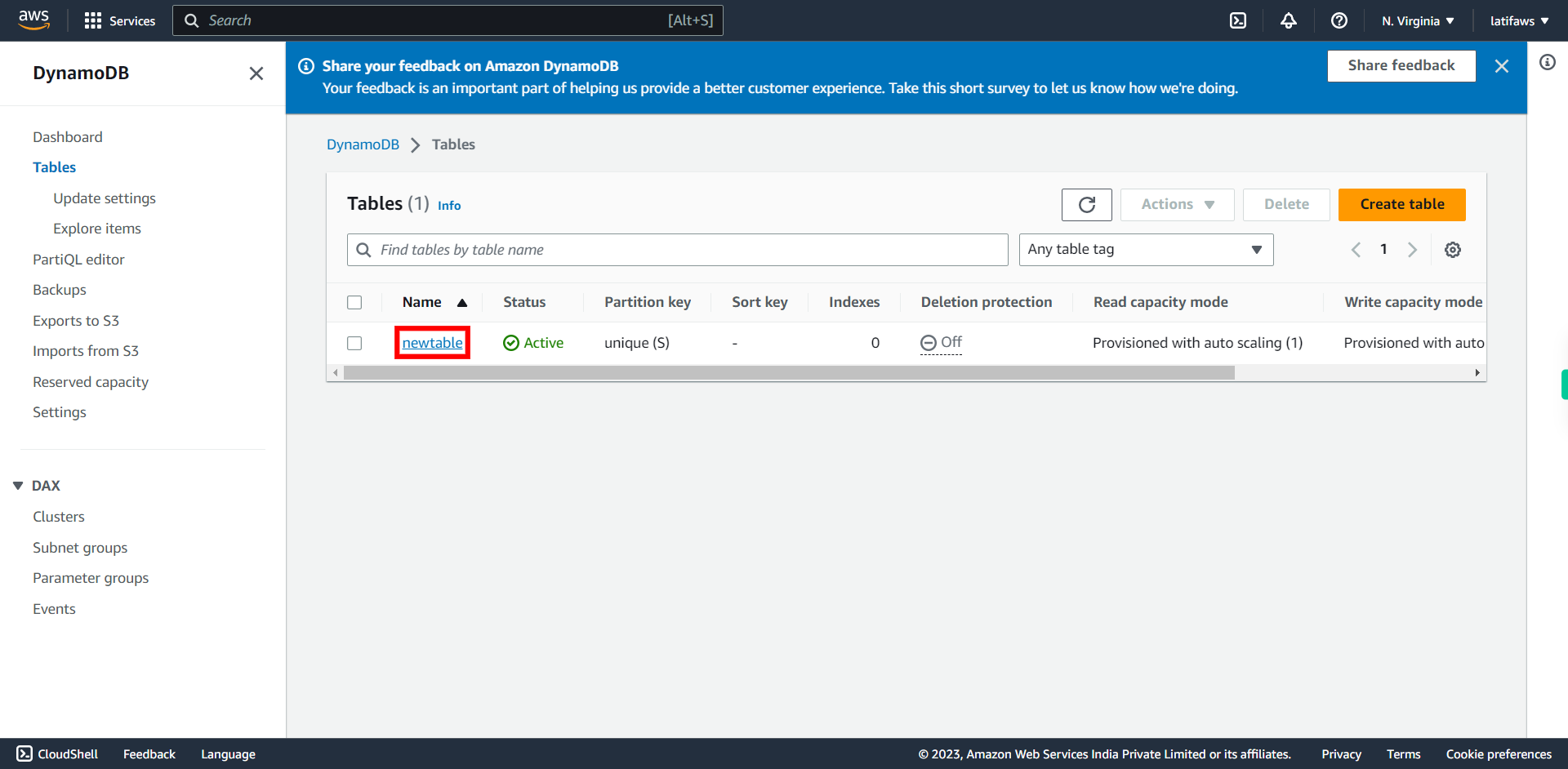

35.

Click "Tables"

-

36.

**Press Next in the Supervity instruction widget, then filter for your table and click its name to open it.**

-

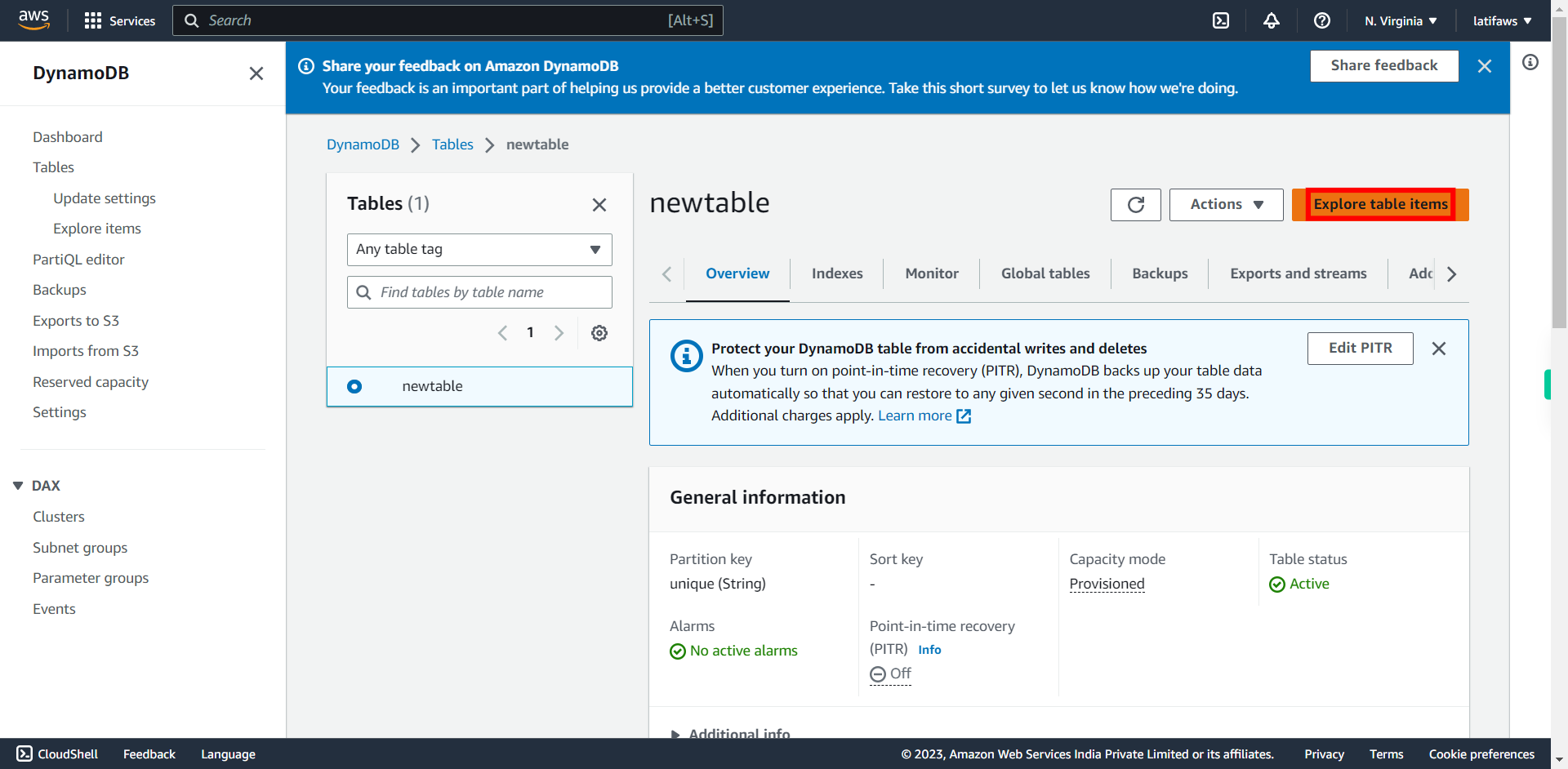

37.

Click "Explore table items"

-

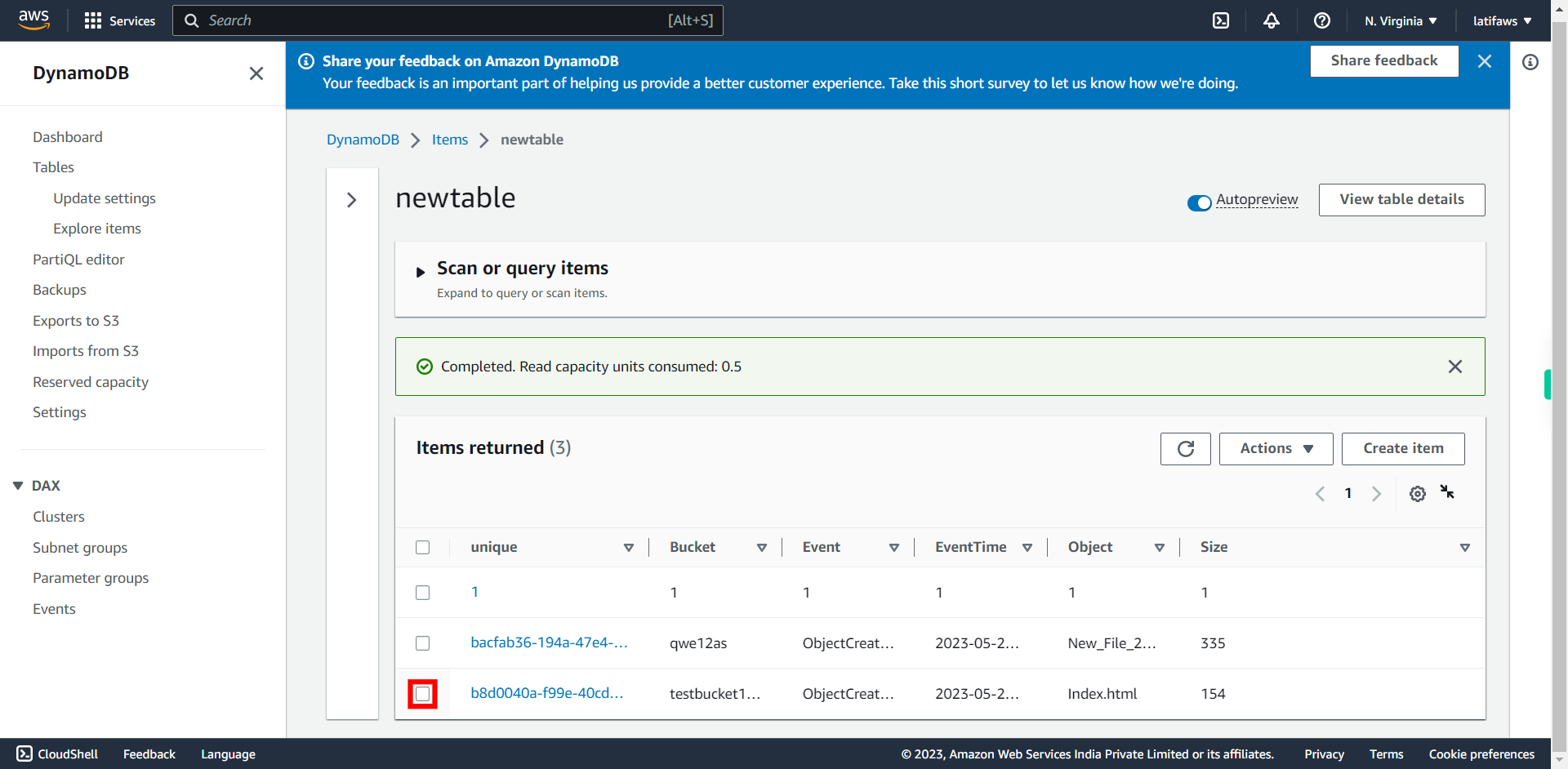

38.

Click to expand and see the table.

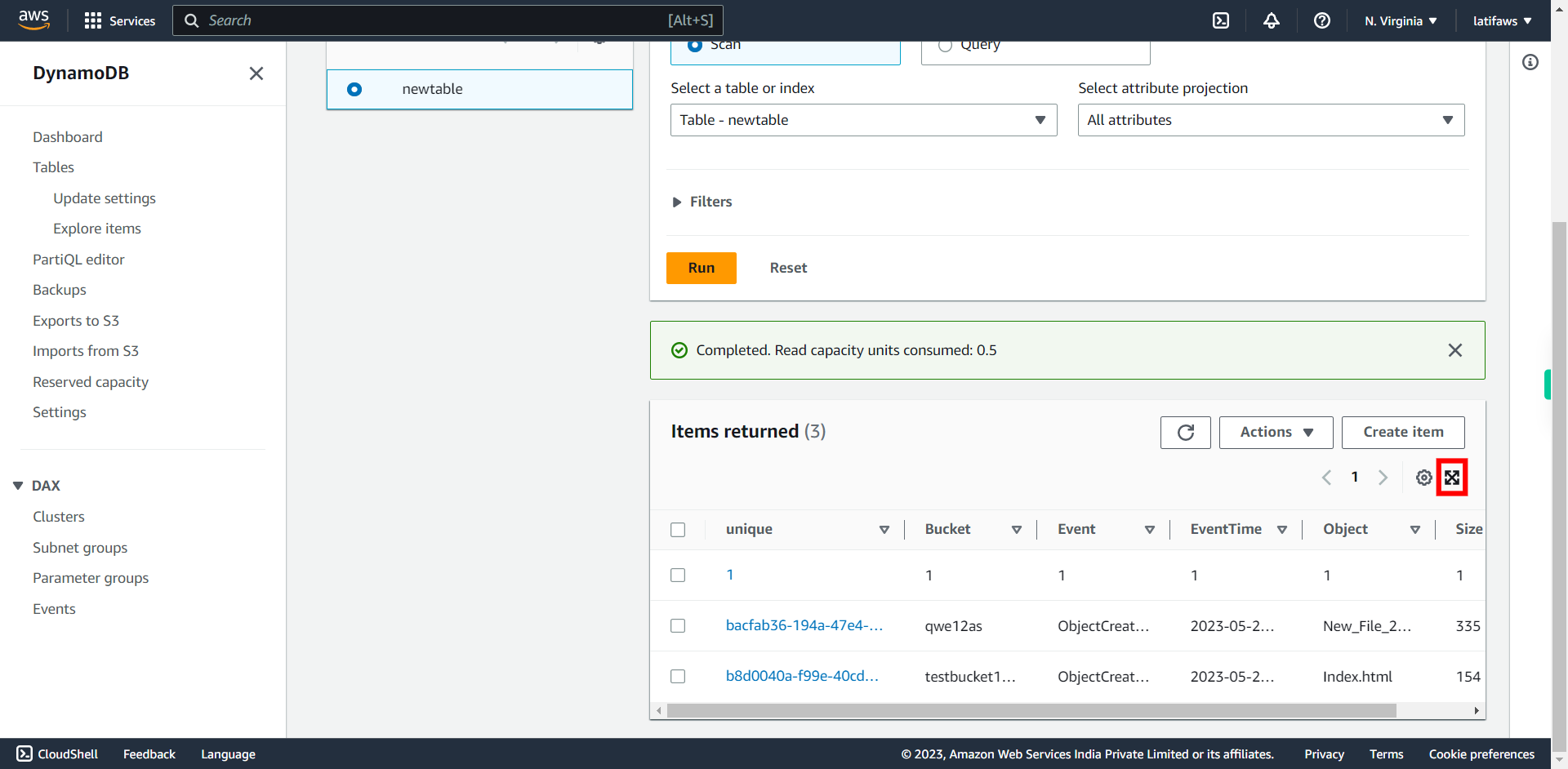

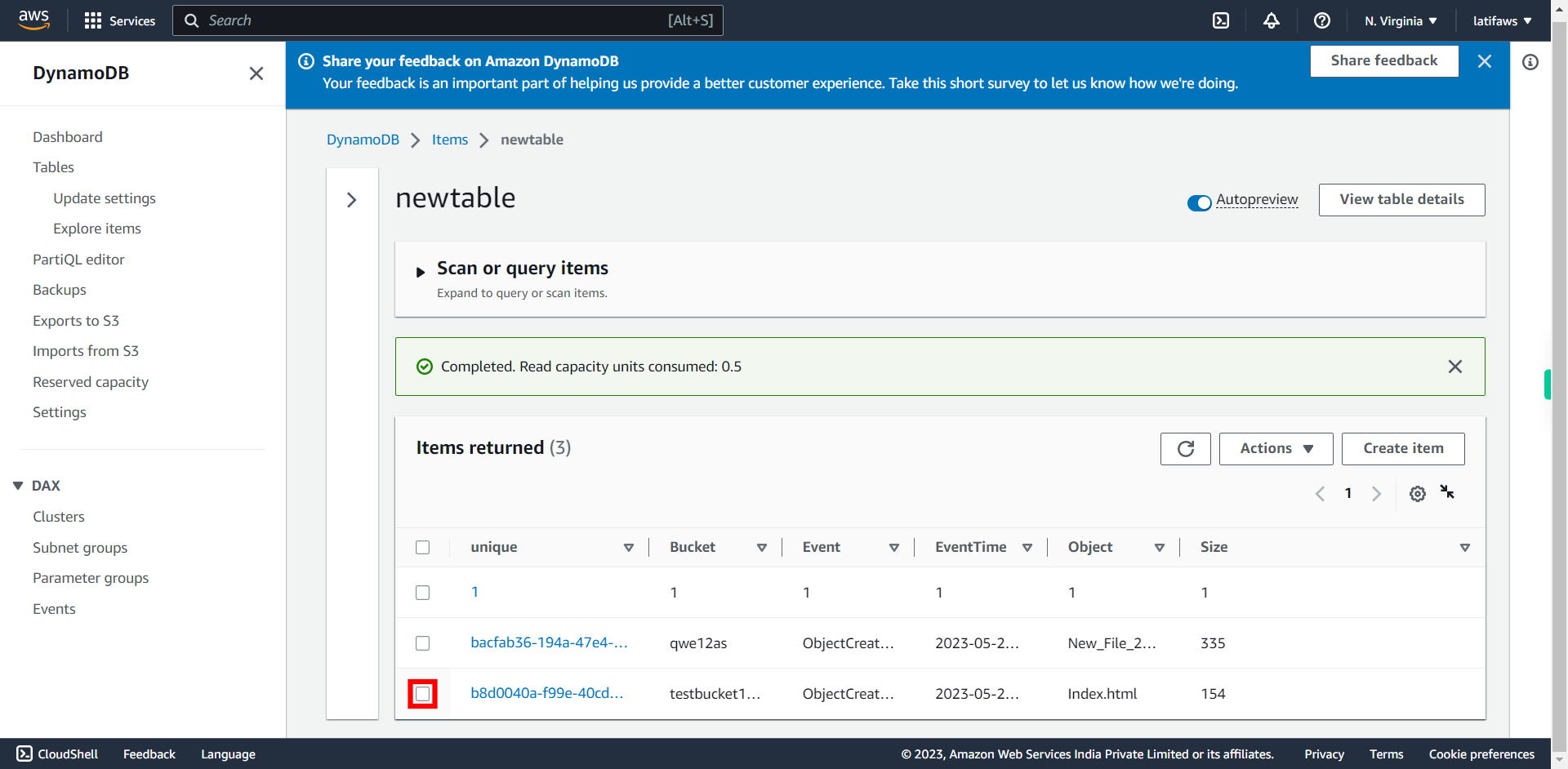

-

39.

Check the data in the table that relates to your file.

-

40.

If you see the metadata of the file that is uploaded, then the scenario is successfully completed.
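The same check can be done from a script by scanning the table for items whose `Object` attribute matches the uploaded file name. The following is a minimal sketch, assuming the table is small enough to scan in full; `find_items_for_object` is a hypothetical helper, and the attribute name `Object` and table name `newtable` come from the function code pasted earlier.

```python
def find_items_for_object(table, object_key: str) -> list:
    """Scan the whole table and return the items recorded for one object key."""
    matches = []
    scan_kwargs = {}
    while True:
        page = table.scan(**scan_kwargs)
        matches.extend(
            item for item in page.get("Items", []) if item.get("Object") == object_key
        )
        # A scan is paginated; continue until no further page is reported.
        last_key = page.get("LastEvaluatedKey")
        if last_key is None:
            return matches
        scan_kwargs["ExclusiveStartKey"] = last_key
```

With real credentials, the table handle would be `boto3.resource("dynamodb").Table("newtable")`; a non-empty result for your file name means the scenario worked.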