Ever wonder how to make your LLM application lightning-fast and cost-effective? Look no further than semantic caching!

The field of user-facing machine learning applications has existed for over a decade, but open-ended large language models (LLMs) have only recently gained significant traction in the past year. Due to this relative newness, best practices for managing cost, latency, and accuracy in LLM-powered applications are still under development.

For developers building user-facing applications, minimizing latency is crucial. Users become frustrated when interactions are slow. Cost is another critical factor. LLM applications need to be financially sustainable – generative AI startups charging users a subscription fee wouldn’t be able to justify an LLM API cost exceeding that fee per user.

Fortunately, techniques like shortening prompts, caching responses, and utilizing more cost-effective models can often be used together. These methods can lead to both faster and cheaper generations.

2024 is shaping up to be the year when LLM applications transition from prototypes to production-ready and scalable solutions. At Supervity, we have implemented most of these methods and have seen amazing results.

Speed Up Your LLM App with Semantic Caching

Imagine powering your application with an LLM, but responses come instantly and at a reduced cost. That’s the magic of caching!

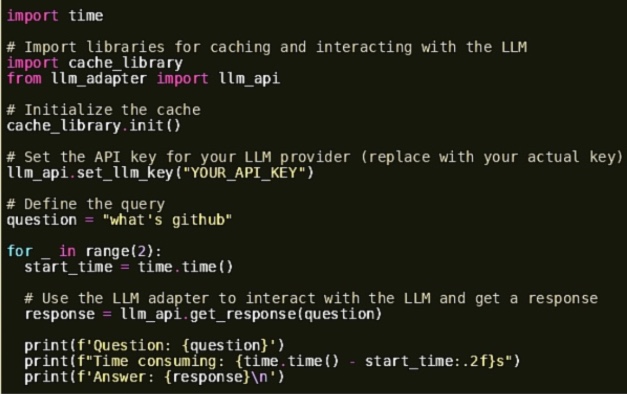

In the world of LLMs, caching involves storing prompts and their corresponding answers for future reference. This way, your app can retrieve these saved responses instead of relying on repeated LLM API calls.

Unlike traditional caching that relies on exact matches, semantic caching goes a step further. It stores prompts and their corresponding responses while also understanding the underlying meaning. This allows retrieved responses to be used for similar questions, even if phrased differently.

For instance, if a user asks, “What’s the capital of France?” and later asks “Tell me the capital city of France,” the cached response can be used for the second question, saving an unnecessary API call.

By implementing caching, you can create a smoother, more cost-effective user experience for your LLM-powered application.

Understanding Semantic Caching in detail

Understanding Semantic Caching in detail

Scenario: Imagine you’re building a chatbot powered by a large language model (LLM). Users frequently ask similar questions, like “What’s the weather in London today?” or “What are the capitals of European countries?”

Without Caching:

Every time a user asks a question, the LLM needs to process the query from scratch. This can be computationally expensive and lead to slow response times.

Repeated queries strain the LLM’s resources, potentially impacting overall performance.

With Caching:

The first time a user asks “What’s the weather in London today?”, the LLM retrieves the answer and stores it in the cache along with the query itself.

Subsequent users who ask the same question trigger a cache lookup first.

Upon matching a user’s query (e.g., ‘What’s the weather in London today?’) with an existing cache entry, the system retrieves the stored response, thereby significantly cutting down both response time and the workload on the LLM.

Benefits:

- Faster Responses: Cached responses are retrieved much quicker than processing new queries with the LLM, leading to a smoother user experience.

- Improved Efficiency: By reducing the load on the LLM, caching allows it to handle more complex queries or serve more users simultaneously.

- Reduced Costs: Depending on your LLM provider’s pricing model, fewer LLM calls can translate to lower operational costs.

Metrics:

The example mentioned metrics like cache hit ratio, latency, and recall. Let’s see how they help analyze the cache’s effectiveness:

- Cache Hit Ratio: This measures the percentage of times the cache provides a response, indicating how often you avoid processing new queries.

- Latency: This refers to the time taken to retrieve a response. In a well-functioning cache, retrieving a cached response should be significantly faster than processing a new query.

- Recall: This metric tells us how likely the cache is to have the answer for a given query. A high recall indicates the cache is effectively capturing commonly asked questions.

By monitoring these metrics, you can fine-tune your caching system for optimal performance.

Taming Long-Winded Conversations: Techniques for Efficient LLM Interactions

Large language models (LLMs) are finding their way into various applications, including chatbots and virtual assistants. These applications often involve multi-turn conversations, where each back-and-forth exchange adds to the overall length. A seemingly brief conversation can quickly balloon into a lengthy dialogue filled with repetitive elements.

This presents a challenge: how can we ensure LLMs process these extended interactions efficiently?

Imagine an AI-powered virtual assistant handling phone calls. Each back-and-forth exchange between the caller and the AI adds to the conversation history. A seemingly brief two-minute call can easily transform into a text transcript hundreds of words long.

Summarization can help us tackle these challenges. Summarization involves condensing lengthy conversations into shorter, more concise versions that capture the essential information.

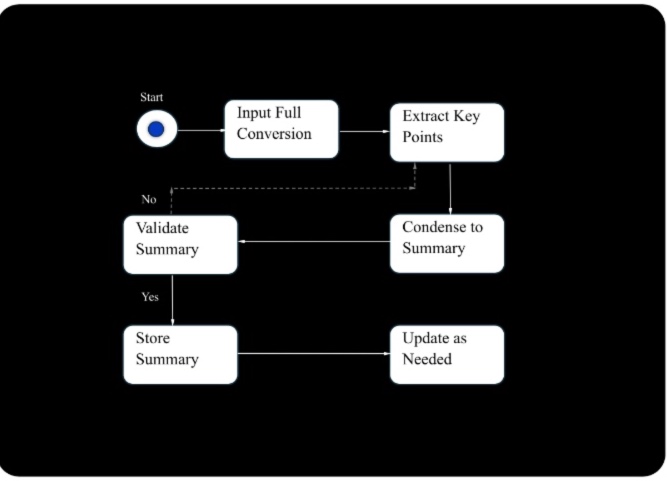

Summarization Workflow

Input Full Conversation → 2. Extract Key Points → 3. Condense to Summary → 4. Validate Summary → 5. Store Summary → 6. Update as Needed